Artificial Intelligence processor unit. Powerful Quantum AI component on PCB motherboard with data … More

Within the industry, where people talk about the specifics of how LLMs work, they often use the term “frontier models.”

But if you’re not connected to this business, you probably don’t really know what that means. You can intuitively apply the word “frontier” to know that these are the biggest and best new systems that companies are pushing.

Another way to describe frontier models is as “cutting-edge” AI systems that are broad in purpose, and overall frameworks for improving AI capabilities.

When asked, ChatGPT gives us three criteria – massive data sets, compute resources, and sophisticated architectures.

Here are some key characteristics of frontier models to help you flush out your vision of how these models work:

First, there is multimodality, where frontier models are likely to support non-text inputs and outputs – things like image, video or audio. Otherwise, they can see and hear – not just read and write.

Another major characteristic is zero-shot learning, where the system is more capable with less prompting.

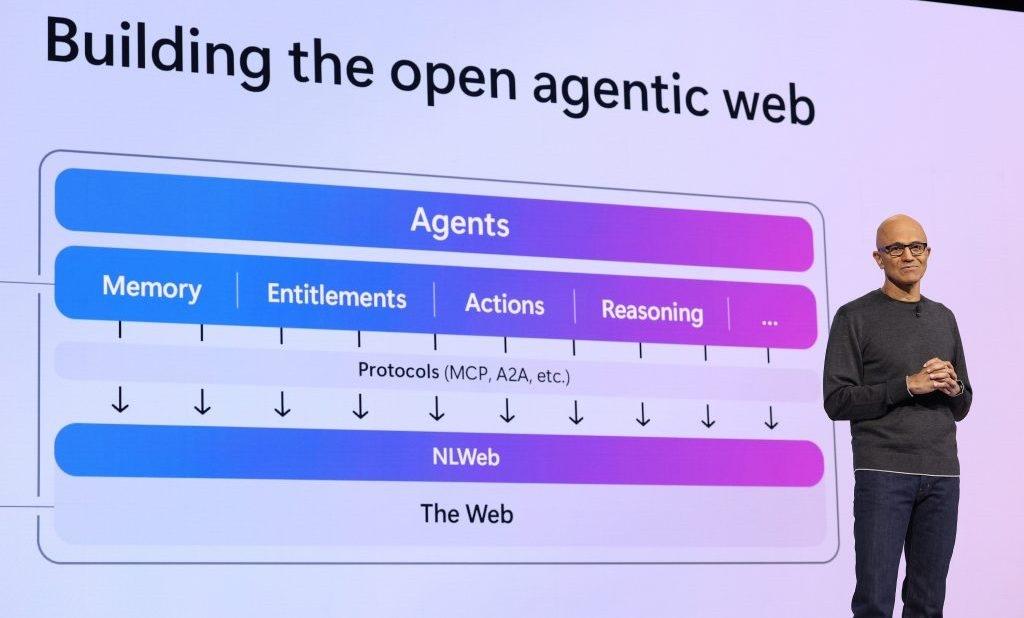

And then there’s that agent-like behavior that has people talking about the era of “agentic AI.”

Examples of Frontier Models

If you want to play “name that model” and get specific about what companies are moving this research forward, you could say that GPT 4o from OpenAI represents one such frontier model, with multi-modality and real-time inference. Or you could tout the capabilities of Gemini 1.5, which is also multimodal, with decent context.

And you can point to any number of other examples of companies doing this kind of research well…but also: what about digging into the build of these systems?

Breaking Down the Frontier Landscape

At a recent panel at Imagination in Action, a team of experts analyzed what it takes to work in this part of the AI space and create these frontier models

The panel moderator, Peter Grabowski, introduced two related concepts for frontier models – quality versus sufficiency, and multimodality.

“We’ve seen a lot of work in text models,” he said. “We’ve seen a lot of work on image models. We’ve seen some work in video, or images, but you can easily imagine, this is just the start of what’s to come.”

Douwe Kiela, CEO of Contextual AI, pointed out that frontier models need a lot of resources, noting that “AI is a very resource-intensive endeavor.”

“I see the cost versus quality as the frontier, and the models that actually just need to be trained on specific data, but actually the robustness of the model is there,” said Lisa Dolan, managing director of Link Ventures (I am also affiliated with Link.)

“I think there’s still a lot of headroom for growth on the performance side of things,” said Vedant Agrawal, VP of Premji Invest.

Agrawal also talked about the value of using non-proprietary base models.

“We can take base models that other people have trained, and then make them a lot better,” he said. “So we’re really focused on all the all the components that make up these systems, and how do we (work with) them within their little categories?”

Benchmarking and Interoperability

The panel also discussed benchmarking as a way to measure these frontier systems.

“Benchmarking is an interesting question, because it is single-handedly the best thing and the worst thing in the world of research,” he said. “I think it’s a good thing because everyone knows the goal posts and what they’re trying to work towards, and it’s a bad thing because you can easily game the system.”

How does that “gaming the system” work? Agrawal suggested that it can be hard to really use benchmarks in a concrete way.

“For someone who’s not deep in the research field, it’s very hard to look at a benchmarking table and say, ‘Okay, you scored 99.4 versus someone else scored 99.2,’” he said. “It’s very hard to contextualize what that .2% difference really means in the real world.”

“We look at the benchmarks, because we kind of have to report on them, but there’s massive benchmark fatigue, so nobody even believes it,” Dolan said.

Later, there was some talk about 10x systems, and some approaches to collecting and using data:

· Identifying contractual business data

· Using synthetic data

· Teams of annotators

When asked about the future of these systems, the panel return these three concepts:

· AI agents

· Cross-disciplinary techniques

· Non-transformer architectures

Watch the video to get the rest of the panel’s remarks about frontier builds.

What Frontier Interfaces Will Look Like

Here’s a neat little addition – interested in how we will interact with these frontier models in 10 years’ time, I put the question to ChatGPT.

Here’s some of what I got:

“You won’t ‘open’ an app—they’ll exist as ubiquitous background agents, responding to voice, gaze, emotion, or task cues … your AI knows you’re in a meeting, it reads your emotional state, hears what’s being said, and prepares a summary + next actions—before you ask.”

That combines two aspects, the mode, and the feel of what new systems are likely to be like.

This goes back to the personal approach where we start seeing these models more as colleagues and conversational partners, and less as something that stares at you from a computer screen.

In other words, the days of PC-DOS command line systems are over. Windows changed the computer interface from a single-line monochrome system, to something vibrant with colorful windows, reframing, and a tool-based desktop approach.

Frontier models are going to do even more for our sense of interface progression.

And that’s going to be big. Stay tuned.