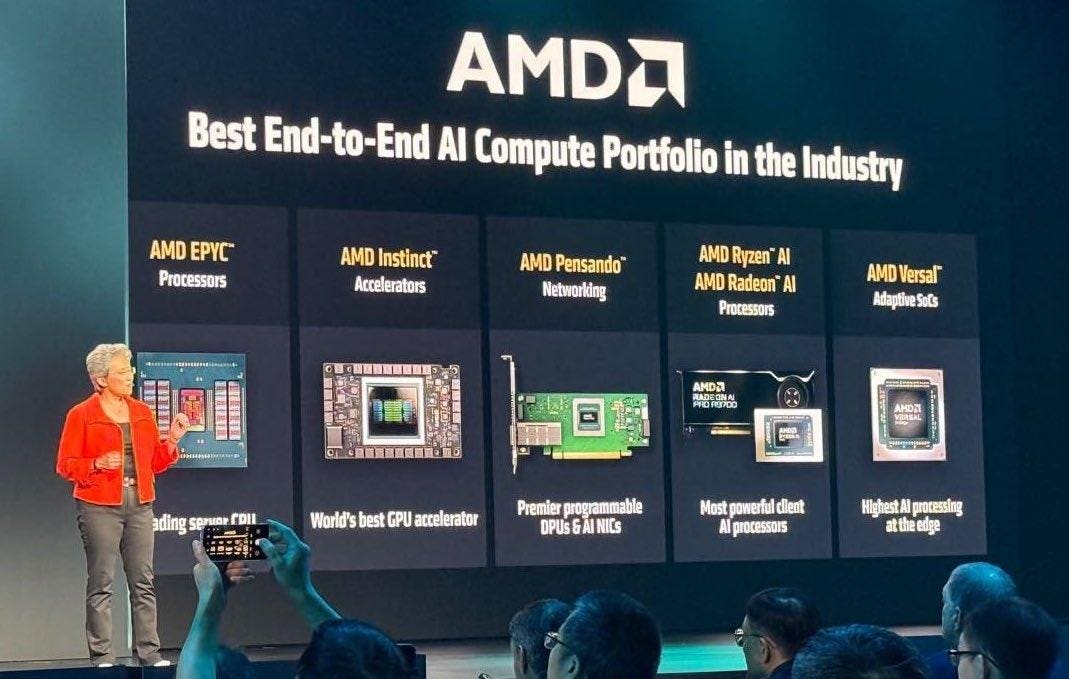

At the AMD Advancing AI event, CEO Lisa Su touted the company’s AI compute portfolio.

At the AMD Advancing AI event in San Jose earlier this month, CEO Lisa Su and her staff showcased the company’s progress across many different facets of AI. They had plenty to announce in both hardware and software, including significant performance gains for GPUs, ongoing advances in the ROCm development platform and the forthcoming introduction of rack-scale infrastructure. There were also many references to trust and strong relationships with customers and partners, which I liked, and a lot of emphasis on open hardware and an open development ecosystem, which I think is less of a clear winner for AMD, as I’ll explain later.

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does what it says it will do. This is exactly what it must continue doing to whittle away at Nvidia’s dominance in the datacenter AI GPU market. What I saw at the Advancing AI event raised my confidence from last year — although there are a few gaps that need to be addressed.

(Note: AMD is an advisory client of my firm, Moor Insights & Strategy.)

AMD’s AI Market Opportunity And Full-Stack Strategy

When she took the stage, Su established the context for AMD’s announcements by describing the staggering growth that is the backdrop for today’s AI chip market. Just take a look at the chart below.

So far, AMD’s bullish projections for the growth of the AI chip market have turned out to be …

So this segment of the chip industry is looking at a TAM of half a trillion dollars by 2028, with the whole AI accelerator market increasing at a 60% CAGR. The AI inference sub-segment, where AMD competes on better footing with Nvidia, is enjoying an 80% CAGR. Some people thought that the market numbers AMD cited last year were too high, but they weren’t. This is the world we’re living in. For the record, I never doubted the TAM numbers last year.
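To make the compounding behind those growth rates concrete, here is a minimal sketch in Python. The 60% and 80% rates and the $500 billion figure come from the numbers above; the five-year horizon and the helper names are assumptions chosen for illustration, not something AMD presented in this form.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a market gets after compounding at `cagr` for `years`."""
    return (1.0 + cagr) ** years


def implied_starting_size(end_size: float, cagr: float, years: int) -> float:
    """Back out the starting market size implied by an end size and a CAGR."""
    return end_size / growth_multiple(cagr, years)


if __name__ == "__main__":
    HORIZON_YEARS = 5          # assumption: illustrative horizon, not stated in the article
    TAM_2028_BILLIONS = 500.0  # "half a trillion dollars by 2028"

    for label, rate in [("AI accelerators", 0.60), ("AI inference", 0.80)]:
        multiple = growth_multiple(rate, HORIZON_YEARS)
        print(f"{label}: {multiple:.1f}x over {HORIZON_YEARS} years")

    start = implied_starting_size(TAM_2028_BILLIONS, 0.60, HORIZON_YEARS)
    print(f"Implied starting TAM at 60% CAGR: ${start:.0f}B")
```

Under that assumed horizon, a 60% CAGR means the market grows roughly tenfold, which is why even small differences in the assumed rate move the end-state TAM so much.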

AMD is carving out a bigger place in this world for itself. As Su pointed out, its Instinct GPUs are used by seven of the 10 largest AI companies, and they drive AI for Microsoft Office, Facebook, Zoom, Netflix, Uber, Salesforce and SAP. Its EPYC server CPUs continue to put up record market share (40% last quarter), and it has built out a full stack — partly through smart acquisitions — to support its AI ambitions. I would point in particular to the ZT Systems acquisition and the introduction of the Pensando DPU and the Pollara NIC.

GPUs are at the heart of datacenter AI, and AMD’s new MI350 series was in the spotlight at this event. Although these chips were slated to ship in Q3, Su said that production shipments had in fact started earlier in June, with partners on track to launch platforms and public cloud instances in Q3. There were cheers from the crowd when they heard that the MI350 delivers a 4x performance improvement over the prior generation. AMD says that its high-end MI355X GPU outperforms the Nvidia B200 to the tune of 1.6x memory, 2.2x compute throughput and 40% more tokens per dollar. (Testing by my company Signal65 showed that the MI355X running DeepSeek-R1 produced up to 1.5x higher throughput than the B200.)

To put it in a different perspective, a single MI355X can run a 520-billion-parameter model. And I wasn’t surprised when Su and others onstage looked ahead to even better performance — maybe 10x better — projected for the MI400 series and beyond. That puts us into the dreamland of an individual GPU running a trillion-parameter model.
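A rough way to sanity-check the single-GPU claim is to count the bytes needed for the model weights alone. The sketch below assumes 288 GB of HBM on the MI355X (a figure from AMD's published specs, not stated in this article) and 4-bit weights. It ignores KV cache, activations and runtime overhead, so treat it as back-of-the-envelope arithmetic rather than a sizing tool.

```python
GB = 1e9  # decimal gigabytes


def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in GB."""
    return num_params * bits_per_param / 8 / GB


def weights_fit(num_params: float, bits_per_param: int, hbm_gb: float) -> bool:
    """True if the weights alone fit in the given HBM capacity."""
    return weight_footprint_gb(num_params, bits_per_param) <= hbm_gb


if __name__ == "__main__":
    PARAMS = 520e9        # the 520-billion-parameter model cited above
    ASSUMED_HBM_GB = 288  # assumption: MI355X capacity, not given in the article

    for bits in (16, 8, 4):
        size = weight_footprint_gb(PARAMS, bits)
        verdict = "fits" if weights_fit(PARAMS, bits, ASSUMED_HBM_GB) else "does not fit"
        print(f"{bits}-bit weights: {size:.0f} GB, {verdict} in {ASSUMED_HBM_GB} GB")
```

Under these assumptions the weights only fit at 4-bit precision, which suggests the headline number depends on low-precision formats.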

By the way, AMD has not forgotten for one second that it is a CPU company. The EPYC Venice processor scheduled to hit the market in 2026 should be better at absolutely everything — 256 high-performance cores, 70% more compute performance than the current generation and so on. EPYC’s rapid gains in datacenter market share over the past few years are no accident, and at this point all the company needs to do for CPUs is hold steady on its current up-and-to-the-right trajectory. I am hopeful that Signal65 will get a crack at testing the claims the company made at the event.

This level of performance is needed in the era of agentic AI and a landscape of many competing and complementary AI models. Su predicts, and I agree, that there will be hundreds of thousands of specialized AI models in the coming years. This is especially true for enterprises, which will have smaller models focused on areas like CRM, ERP, SCM, HCM, legal, finance and so on. To support this, AMD talked at the event about its plan to sustain an annual cadence of Instinct accelerators, adding a new generation every year. Easy to say, hard to do, though again, AMD has a high say/do ratio these days.

AMD’s 2026 Rack-Scale Platform And Current Software Advances

On the hardware side, the biggest announcement was the forthcoming Helios rack-scale GPU product that AMD plans to deliver in 2026. This is a big deal, and I want to emphasize how difficult it is to bring together high-performing CPUs (EPYC Venice), GPUs (MI400) and networking chips (next-gen Pensando Vulcano NICs) in a liquid-cooled rack. It’s also an excellent way to take on Nvidia, which makes a mint off of its own rack-scale offerings for AI. At the event, Su said she believes that Helios will be the new industry standard when it launches next year (and cited a string of specs and performance numbers to back that up). It’s good to see AMD provide a roadmap this far out, but it also had little choice after Nvidia laid out its own roadmap at the GTC event earlier this year.

On the software side, Vamsi Boppana, senior vice president of the Artificial Intelligence Group at AMD, started off by announcing the arrival of ROCm 7, the latest version of the company’s open source software platform for GPUs. Again, big improvements come with each generation — in this case, a 3.5x gain in inference performance compared to ROCm 6. Boppana stressed the very high cadence of updates for AMD software, with new features being released every two weeks. He also talked about the benefits of distributed inference, which allows the two steps of inference to be tasked to separate GPU pools, further speeding up the process. Finally, he announced — to a chorus of cheers — the AMD Developer Cloud, which makes AMD GPUs accessible from anywhere so developers can use them to test-drive their ideas.
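For readers who want a picture of what splitting inference across GPU pools means, here is a toy sketch. The article does not name the two steps; they are commonly called prefill (processing the whole prompt) and decode (generating output tokens one at a time), and that is the assumption here. Nothing below is ROCm code or an AMD API: the class, function and worker names are hypothetical, and the "model" is a placeholder that emits dummy tokens.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Request:
    prompt_tokens: list[str]
    max_new_tokens: int
    kv_cache: list[str] = field(default_factory=list)
    output_tokens: list[str] = field(default_factory=list)


def run_prefill(request: Request) -> None:
    """Phase 1: process the whole prompt in one pass and build the cache."""
    request.kv_cache = list(request.prompt_tokens)


def run_decode(request: Request) -> None:
    """Phase 2: generate tokens one at a time, extending the cache each step."""
    for step in range(request.max_new_tokens):
        token = f"<tok{step}>"  # placeholder for a real model's next token
        request.output_tokens.append(token)
        request.kv_cache.append(token)


def serve(requests: list[Request], prefill_pool: list[str], decode_pool: list[str]):
    """Send each request through a prefill worker, then hand it to a decode worker."""
    handoff = deque()
    for i, request in enumerate(requests):
        prefill_worker = prefill_pool[i % len(prefill_pool)]  # round-robin placement
        run_prefill(request)
        handoff.append((request, prefill_worker))

    placements = []
    i = 0
    while handoff:
        request, prefill_worker = handoff.popleft()
        decode_worker = decode_pool[i % len(decode_pool)]
        run_decode(request)
        placements.append((prefill_worker, decode_worker))
        i += 1
    return placements
```

The point of the separation is that the two phases stress hardware differently, so each pool can be sized and scheduled on its own. A real system would also have to move the cache between pools, which this sketch skips.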

Last year, Meta had kind things to say about ROCm, and I was impressed because Meta is the hardest “grader” next to Microsoft. This year, I heard companies talking about both training and inference, and again I’m impressed. (More on that below.) It was also great getting some time with Anush Elangovan, vice president for AI software at AMD, for a video I shot with him. Elangovan is very hardcore, which is exactly what AMD needs. Real grinders. Nightly code drops.

What’s Working Well For AMD In AI

So that’s (most of) what was new at AMD Advancing AI. In the next three sections, I want to talk about the good, the needs-improvement and the yet-to-be-determined aspects of what I heard during the event.

Let’s start with the good things that jumped out at me.

- Rack-scale platform announcements — By all means, we shouldn’t count any chickens before they’re hatched, and we don’t know yet how well Helios will perform in the marketplace. But I have confidence in the team’s ability to deliver on this very challenging project, and I think this could turn into a nice competitive offering that will force Nvidia to take notice in an area where it has effectively had free rein.

- ROCm progress — This is at least as exciting as the developments in rack-scale hardware, and maybe even more so, both because of what the new version enables and because of what its quality and cadence of improvement say about AMD’s maturation in the AI field. While much of the industry’s attention over the past 2.5 years of the AI revolution has been on GPUs, in practical terms the software is as important as the hardware. In support of this observation, I’ll note that in years past, I’ve had OEM executives tell me bluntly that they selected Nvidia at key junctures because its software was so much better than any competing chip maker’s. Given AMD’s rapid rise in this area, I don’t think they could say that so easily today, at least at the CUDA layer. But if you use Nvidia models, frameworks or NIMs, that’s still a hard one to overcome.

- AI training — Last year, AMD talked plenty about training alongside inference, but its customers only talked about inference. The contrast with this event was clear: Technical leaders from Meta, Microsoft and Cohere, among others, emphasized that they’re using AMD GPUs for training, not just inference. That real-world validation is important.

- TCO and trust — Why is AMD winning business? One customer or partner after another reiterated terms like “trust” and “relationship” to talk about working with AMD, and “TCO” or “price/performance” to talk about how its products deliver. Mind you, this doesn’t mean that AMD is a pushover, or that its products are cheap; it means that the company makes itself reliable and easy to do business with, and that its products solve the problems they’re supposed to in a way that makes business sense.

- UALink — This is a narrower item than the ones above. Although my firm has covered the Ultra Accelerator Link Consortium since its launch last year, lately I’ve been wondering about UALink’s competitive position. That was especially true after Nvidia announced NVLink Fusion last month. But I’m feeling better about the vitality of UALink after hearing about it from Astera Labs CEO Jitendra Mohan onstage at the AMD event and swiveling back to talk with the XPU providers.

- New friends — I was pleasantly surprised that the guests onstage included leaders from xAI and OpenAI (no less than Sam Altman himself). While it’s too early to tell whether this will result in a big chunk of business with either company (or simply be used by the AI companies as a price lever against Nvidia), it was impressive nonetheless.

What Didn’t Work For Me At Advancing AI

While overall I thought Advancing AI was a win for AMD, there were two areas where I thought the company missed the mark — one by omission, one by commission.

- NIMs — While Nvidia’s CUDA platform is its biggest competitive differentiator in software, it’s hardly the only differentiator. Its NIM microservices make it easier for organizations to deploy foundation models in the cloud, the datacenter, wherever. And I haven’t seen anything from AMD so far that could counter Nvidia’s advantage in this area. If AMD has the goods, it needs to message and publicize them better. If it doesn’t, I think it has to step up in this area or risk competing at a disadvantage. This is especially true in the enterprise, where SAP, ServiceNow, Cloudera, Databricks, Workday and others have enterprise NIMs. I know the Linux Foundation is working on something, but it would have been great to reinforce this onstage.

- “Open” tropes — I believe that AMD is correct when it says that “open” technology (hardware, software, ecosystems) generally wins out when we look across the history of modern information technology. And it’s not hard to think of supporting examples such as Linux, PyTorch, OCP or Android. But in the specific case of commercial GPUs, openness hasn’t won during the past 20-plus years. By this point, I’ve seen this movie over and over and over. “Open” has typically been too slow, while Nvidia continues to operate a lot more quickly than the typical “closed” dominant vendors we’ve seen in various areas of technology over the past several decades. Does anybody remember when OpenCL was going to put Nvidia in its place? So while I like open standards and open development processes for growing markets and spreading technology advancements widely, there’s only so much it helps to bang that drum when you’re up against an 800-pound gorilla that doesn’t seem to ever get tired or ever lose its thirst to win and win quickly. If I see proof otherwise, I can change my mind.

The Jury Is Out On Some Elements Of AMD’s AI Strategy

In some areas, I suspect that AMD is doing okay or will be doing okay soon — but I’m just not sure. I can’t imagine that any of the following items has completely escaped AMD’s attention, but I would recommend that the company address them candidly so that customers know what to expect and can maintain high confidence in what AMD is delivering.

- Rack-scale ecosystem participation — As I said above, Helios sounds great. But how do OEMs and ODMs play in an environment where AMD is creating its own rack-scale solutions? Do they participate at the blueprint stage? Through validated OCP designs? I’d love to know more about how AMD sees these partnerships working for rack-scale.

- Storage — Simple question: What are the best storage solutions for AMD’s rack-scale products? (Follow-up question: Any key vendors on the horizon as partners for this?) For that matter, how about data management with companies like Cloudera, Databricks, Snowflake, IBM and more?

- No OEMs on stage for GPUs — AMD listed dozens of MI350 partners, but none of the partners or customers that spoke from the stage came from OEMs. Is that coincidental? Are OEMs angry about competition for rack-scale solutions? I think AMD would be well served to give some extra TLC to any OEM-related announcements in its pipeline. Optics aren’t everything . . . but they’re not nothing, either.

- Enterprise GPUs — As I’ve discussed before, AMD has made great strides with its processors (both GPUs and CPUs) with hyperscalers. In the enterprise datacenter? Less so. Progress with enterprise GPUs seems unclear, and I’d love to see an announcement of a nice customer win or two here.

- No new hyperscalers announced — As just mentioned, hyperscalers have been very important for AMD’s success over the past few years, and any chip vendor would love to hear the glowing words that Microsoft, Meta and Oracle offered onstage at this event. But AWS and Google are MI-series holdouts so far. That said, if the CSPs plan to announce AMD adoption, they would likely prefer to do it on their own terms at their own events (e.g., AWS re:Invent toward the end of the year). Both AWS and Google have made significant investments in their own XPUs, so would they focus on AMD for inference? Ultimately, I think we will see them come on board, but I don’t know when.

What Comes Next In AMD’s AI Development

It is very difficult to engineer cutting-edge semiconductors — let alone rack-scale systems and all the attendant software — on the steady cadence that AMD is maintaining. So kudos to Su and everyone else at the company who’s making that happen. But my confidence (and Wall Street’s) would rise if AMD provided more granularity about what it’s doing, starting with datacenter GPU forecasts. Clearly, AMD doesn’t need to compete with Nvidia on every single thing to be successful. But it would be well served to fill in some of the gaps in its story to better speak to the comprehensive ecosystem it’s creating.

Having spent plenty of time working inside companies on both the OEM and semiconductor sides, I do understand the difficulties AMD faces in providing that kind of clarity. The process of landing design wins can be lumpy, and a few of the non-AMD speakers at Advancing AI mentioned that the company is engaged in the “bake-offs” that are inevitable in that process. Meanwhile, we’re left to wonder what might be holding things back, other than AMD’s institutional conservatism — the healthy reticence of engineers not to make any claims until they’re sure of the win.

That said, with Nvidia’s B200s sold out for the next year, you’d think that AMD should be able to sell every wafer it makes, right? So are AMD’s yields not good enough yet? Or are hyperscalers having their own problems scaling and deploying? Is there some other gating item? I’d love to know.

Please don’t take any of my questions the wrong way, because AMD is doing some amazing things, and I walked away from the Advancing AI event impressed with the company’s progress. At the show, Su was forthright about describing the pace of this AI revolution we’re living in — “unlike anything we’ve seen in modern computing, anything we’ve seen in our careers, and frankly, anything we’ve seen in our lifetime.” I’ll keep looking for answers to my nagging questions, and I’m eager to see how the competition between AMD and Nvidia plays out over the next two years and beyond. Meanwhile, AMD moved down the field at its event, and I look forward to seeing where it is headed.

Moor Insights & Strategy provides or has provided paid services to technology companies, like all tech industry research and analyst firms. These services include research, analysis, advising, consulting, benchmarking, acquisition matchmaking and video and speaking sponsorships. Of the companies mentioned in this article, Moor Insights & Strategy currently has (or has had) a paid business relationship with AMD, Astera Labs, AWS, Cloudera, Google, IBM, the Linux Foundation, Meta, Microsoft, Nvidia, Oracle, Salesforce, ServiceNow, SAP and Zoom.