What if you could have conventional large language model output with 10 to 20 times less energy consumption?

And what if you could put a powerful LLM right on your phone?

It turns out there are new design concepts powering a new generation of AI platforms that will conserve energy and unlock all sorts of new and improved functionality, along with, importantly, capabilities for edge computing.

What is Edge Computing?

Edge computing means that data processing and other workloads take place close to the data’s point of origin: an endpoint such as a piece of data collection hardware or a user’s personal device.

Another way to describe it is that edge computing begins to reverse the cloud era, when people realized that data could be housed centrally. Yes, vendor services can relieve clients of the need to handle on-premises systems, but then you have the costs of data transfer and, typically, less control. If you can simply run operations locally on a hardware device, that creates all kinds of efficiencies, including some related to energy consumption and fighting climate change.

Enter the new Liquid Foundation Models (LFMs), which depart from the traditional transformer-based LLM design.

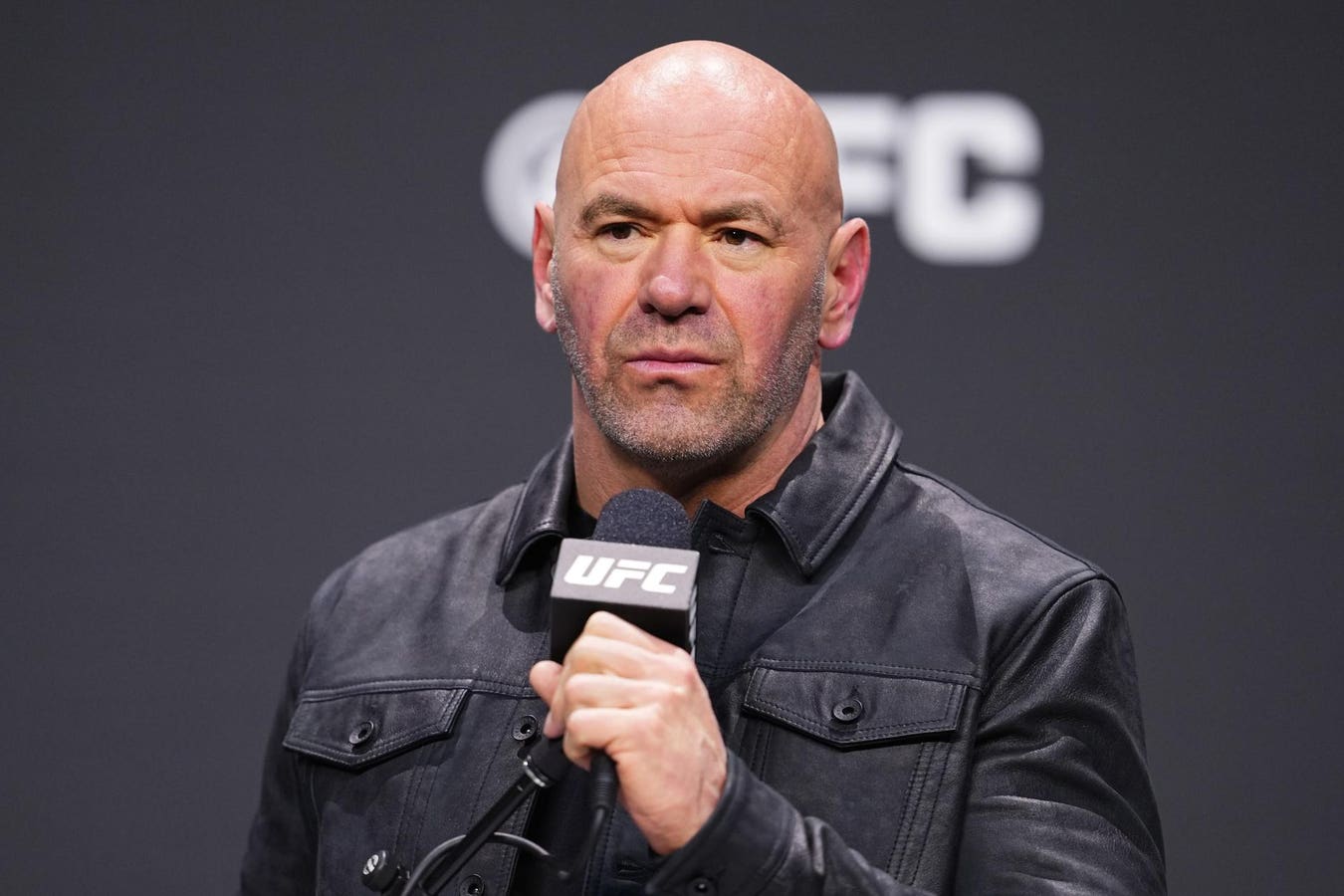

A September 2024 piece in VentureBeat by Carl Franzen covers some of the design that’s relevant here. I’ll include the usual disclaimer: I have been listed as a consultant with Liquid AI, and I know a lot of the people at the MIT CSAIL lab where this is being worked on. But don’t take my word for it; check out what Franzen has to say.

“The new LFM models already boast superior performance to other transformer-based ones of comparable size such as Meta’s Llama 3.1-8B and Microsoft’s Phi-3.5 3.8B,” he writes. “The models are engineered to be competitive not only on raw performance benchmarks but also in terms of operational efficiency, making them ideal for a variety of use cases, from enterprise-level applications specifically in the fields of financial services, biotechnology, and consumer electronics, to deployment on edge devices.”

More from a Project Leader

Then there’s this interview at IIA this April with Will Knight and Ramin Hasani, of Liquid AI.

Hasani talked about how the Liquid AI teams developed models using the brain of a worm: C. elegans, to be exact.

He talked about the use of these post-transformer models on devices, cars, drones, and planes, and applications to predictive finance and predictive healthcare.

LFMs, he said, can do the job of a GPT, running locally on devices.

“They can hear, and they can talk,” he said.

More New Things

Since a recent project launch, Hasani said, Liquid AI has been having commercial discussions with big companies about how to apply this technology well to enterprise.

“People care about privacy, people care about secure applications of AI, and people care about low latency applications of AI,” he said. “These are the three places where enterprise does not get the value from the other kinds of AI companies that are out there.”

Talking about how an innovator should be a “scientist at heart,” Hasani went over some of the basic value propositions of having an LLM running offline.

Look, No Infrastructure

One of the main points that came out of this particular conversation around LFMs is that if they’re running offline on a device, you don’t need the extended infrastructure of connected systems. You don’t need a data center or cloud services, or any of that.

In essence, these systems can be both low-cost and high-performance, and that’s just one aspect of how people talk about applying a “Moore’s law” concept to AI: systems are quickly getting cheaper, more versatile, and easier to manage.

So keep an eye out for this kind of development as we see smarter AI emerging.