Photo by Igor Omilaev on Unsplash

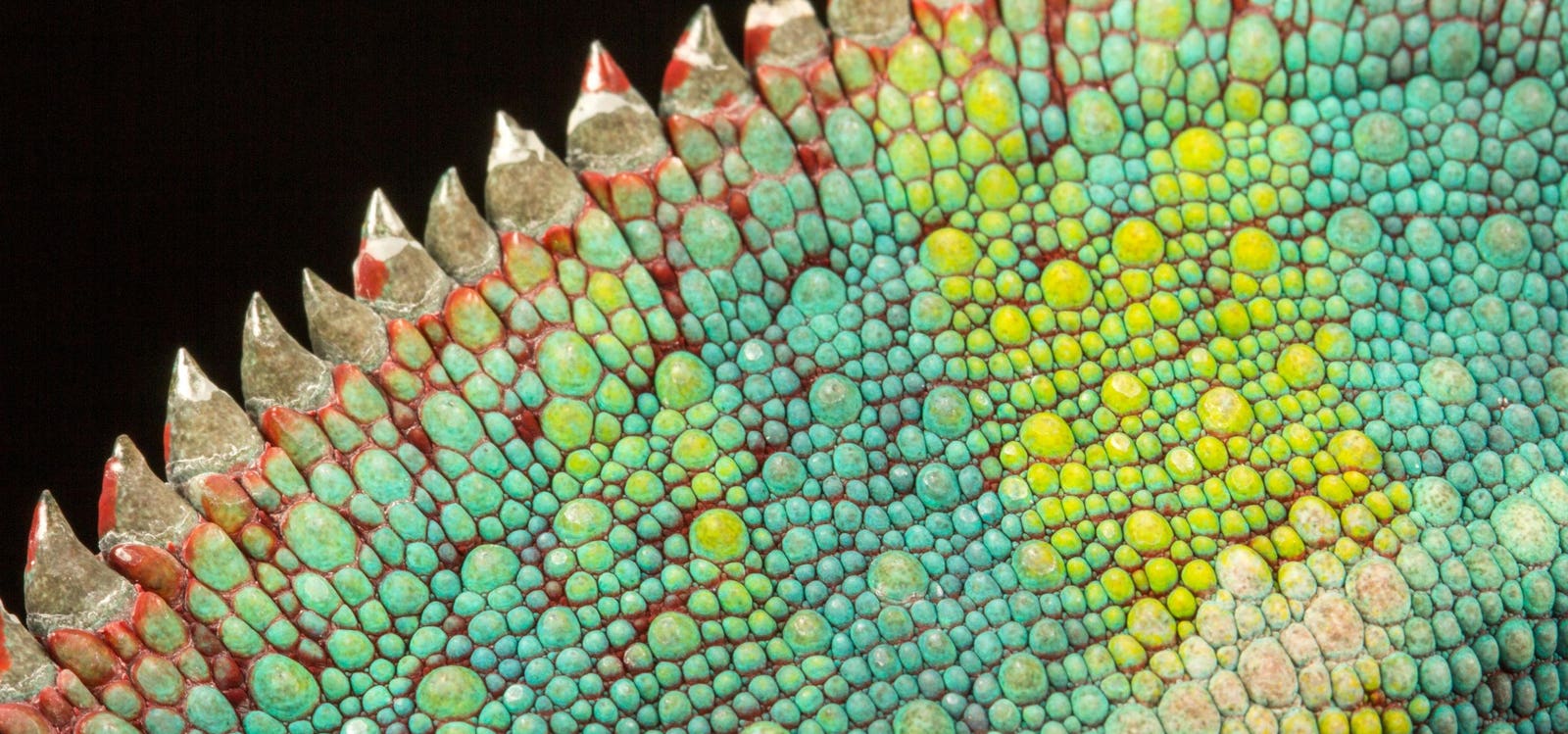

If you saw the HBO show Game of Thrones, you’re probably aware of the close but complex relationship Daenerys Targaryen had with her three dragons: Drogon, Rhaegal, and Viserion. On the show, dragons are powerful but dangerous creatures. In Daenerys’s case, two of them proved too uncontrollable for even her, the Mother of Dragons herself, to fully manage.

AI may not be able to incinerate an enemy army with a blast of flames, but even so, its awesome power reminds me quite a bit of those dragons. There’s so much that today’s technology can do, but its abilities should not be taken lightly. The AI landscape is still pitted with ethical and legal challenges, privacy concerns, unchecked biases, and hallucinations. For leaders weighing whether to bring agentic AI into their operations, these risks are essential to consider.

The truth is, not every company needs an AI agent. Thinking of building your own? Here are three things you need to evaluate.

1. Your Customers Don’t Really Need It

Businesses of all stripes have gone all in on AI, and the result has been a multitude of AI-driven products that no one needs or wants. Jumping on the agentic AI bandwagon just to keep up with the tech-enabled Joneses can not only backfire, it can become a liability.

In fact, research published in the Journal of Hospitality Marketing & Management found that, rather than signaling advanced capabilities and features, products that advertise the use of AI can actually repel customers. “When AI is mentioned, it tends to lower emotional trust, which in turn decreases purchase intentions,” said Mesut Cicek, the study’s lead author. “We found emotional trust plays a critical role in how consumers perceive AI-powered products.”

This isn’t to say that AI isn’t transformative for businesses: 79% of corporate strategists agree that AI is critical to success, according to Gartner. The key is to ensure you’re actually implementing agents in a way that will serve your customers, and not simply capitalizing on the latest buzz. Conduct market research, figure out your friction points, and listen to feedback. The last thing you want is to dump time, energy, and money into an offering that never needed to exist.

2. You’re Hoping To Replace Your Human Workforce

What sets AI agents apart from LLMs is their ability to operate autonomously. For example, while an LLM can generate text responses or summaries when prompted, an AI agent can proactively schedule tasks, connect to external systems (like email or databases), and execute actions on its own, without waiting for a human request.
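To make that distinction concrete, here is a minimal Python sketch. Everything in it is hypothetical: `fake_llm` stands in for a real model call, and the `send_email` and `query_database` tools are stubs that only report what they would have done. It is meant to show the shape of the two patterns, not a real implementation.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: text in, text out."""
    return f"[draft reply to: {prompt}]"


# Hypothetical tool stubs. A real agent would call an email or database API here.
def send_email(body: str) -> str:
    return f"email sent: {body}"


def query_database(query: str) -> str:
    return f"rows for: {query}"


TOOLS: dict[str, Callable[[str], str]] = {
    "send_email": send_email,
    "query_database": query_database,
}


def run_agent(plan: list[tuple[str, str]]) -> list[str]:
    """Execute each (tool, argument) step without waiting for a human prompt.

    In a real agent the model would produce the plan itself; it is passed in
    here (an assumption of this sketch) to keep the example deterministic.
    """
    results = []
    for tool_name, argument in plan:
        results.append(TOOLS[tool_name](argument))
    return results


# LLM pattern: one prompt, one text response, and nothing else happens.
reply = fake_llm("Where is my order?")

# Agent pattern: a sequence of actions carried out against external systems.
actions = run_agent([
    ("query_database", "orders for customer 42"),
    ("send_email", reply),
])
```

The LLM call only returns text for a person to act on. The agent loop is where actions actually reach other systems, which is why the risks discussed below attach to agents in particular.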

For organizations looking to unlock efficiency and save their human workforces from dull and repetitive tasks, agents represent an exciting opportunity. But if your goal is to eliminate every flesh-and-blood member of your team in exchange for a hyper-efficient, AI-powered workforce, you’re looking at it wrong.

While the autonomy of agentic AI is one of its defining features, it’s also one of its greatest risks. An agent’s ability to act independently poses any number of threats, from accidental privacy exposure to data poisoning, any of which can lead to devastating consequences. As Shomit Ghose writes at UC Berkeley’s Sutardja Center for Entrepreneurship and Technology: “We might grant some lenity to an LLM-driven chatbot that hallucinates an incorrect answer, leading us to lose a bar bet. We’ll be less charitable when an LLM-driven agentic AI hallucinates a day-trading strategy in our stock portfolios.”

As a leader, your goal should be for AI agents to work alongside your team, not to replace it. The fact is, agents are good, but they’re not infallible. If an agent commits an error that doesn’t get caught until it’s too late, your organization could lose credibility that it may never recover.
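One common way to keep people in the loop is an approval gate: the agent handles routine actions on its own, while anything risky waits for a human decision. The Python sketch below illustrates the idea under stated assumptions. The `ProposedAction` type, the two-level `risk` label, and the `cautious_reviewer` function are all hypothetical, and how an organization actually scores risk is left open.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    risk: str  # "low" or "high"; the scoring method is an assumption of this sketch


def review(action: ProposedAction,
           human_approves: Callable[[ProposedAction], bool]) -> str:
    """Run low-risk actions automatically; route high-risk ones to a person."""
    if action.risk == "low":
        return "executed"
    # High-risk actions wait for an explicit human decision.
    return "executed" if human_approves(action) else "blocked"


def cautious_reviewer(action: ProposedAction) -> bool:
    """Hypothetical reviewer who rejects everything escalated to them."""
    return False


routine = review(ProposedAction("summarize support tickets", "low"), cautious_reviewer)
risky = review(ProposedAction("rebalance stock portfolio", "high"), cautious_reviewer)
```

Here the routine summary goes through untouched, while the portfolio change is stopped until a person signs off on it.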

3. You’re Not Paying Attention To Government Regulations And Risk Management

The rapid advance of AI agents creates unprecedented opportunities for businesses, but without proper governance, these systems can quickly become liabilities. A major challenge is that AI operates autonomously across vast datasets, learning and evolving in ways that may not always align with ethical or regulatory standards. Leaders considering implementing agentic AI should familiarize themselves with all of the potential hazards, and establish structured oversight frameworks to mitigate them.

As AI-powered decision-making becomes more integral to business operations, companies must establish clear policies around compliance, transparency, and accountability. This includes adopting governance models that align with all current regulations, which are changing rapidly.

Organizations should also integrate AI risk management frameworks to ensure ongoing monitoring and ethical deployment.

AI agents, like Daenerys’s dragons, hold immense power. But without careful and deliberate strategy, they can quickly become more of a liability than an asset. Instead of rushing to adopt AI for the sake of staying on trend, businesses must take a measured approach, ensuring their agents serve real needs, support human expertise, and adhere to evolving regulations.