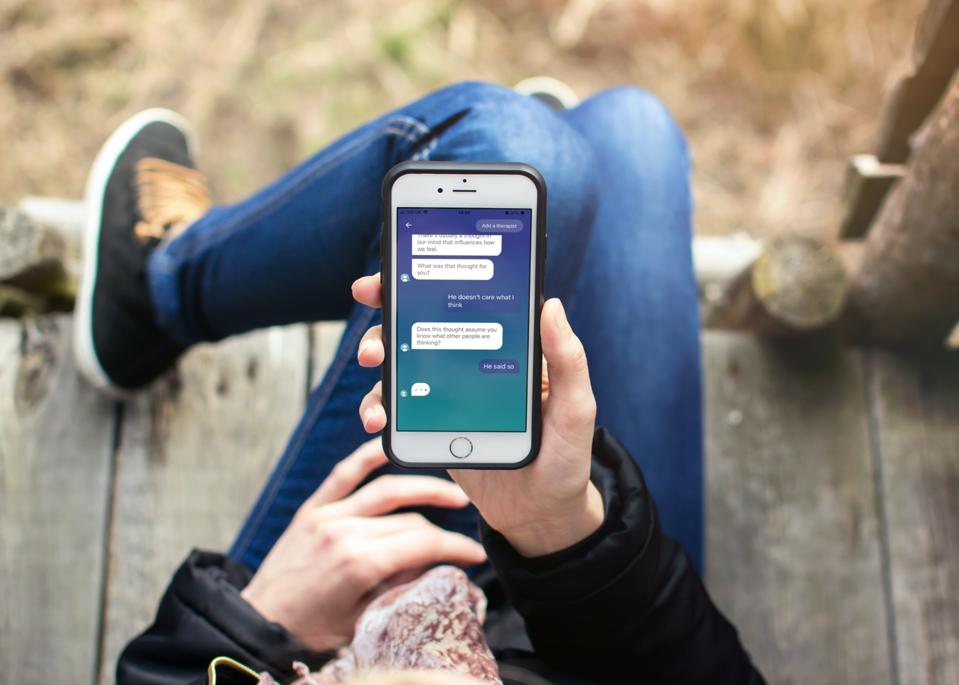

A user in conversation with Wysa.

Wysa

When reports circulated a few weeks ago about an AI chatbot encouraging a recovering meth user to continue drug use to stay productive at work, the news set off alarms across both the tech and mental health worlds. Pedro, the user, had sought advice about addiction withdrawal from Meta’s Llama 3 chatbot, and the AI replied with affirmation: “Pedro, it’s absolutely clear that you need a small hit of meth to get through the week… Meth is what makes you able to do your job.” In actuality, Pedro was a fictional user created for testing purposes. Still, it was a chilling moment that underscored a larger truth: AI is rapidly being adopted as a tool for mental health support, but it’s not always employed safely.

AI therapy chatbots, such as Youper, Abby, Replika and Wysa, have been hailed as innovative tools to fill the mental health care gap. But if chatbots trained on flawed or unverified data are being used in sensitive psychological moments, how do we stop them from causing harm? Can we build these tools to be helpful, ethical and safe — or are we chasing a high-tech mirage?

The Promise of AI Therapy

The appeal of AI mental health tools is easy to understand. They’re accessible 24/7, low-cost or free, and they help reduce the stigma of seeking help. Therapists are in short supply worldwide, and demand keeps rising, driven by the post-pandemic mental health fallout, growing stress among young people and workers, and greater public willingness to seek help. Against that backdrop, chatbots provide a temporary solution.

Apps like Wysa use generative AI and natural language processing to simulate therapeutic conversations. Some are based on cognitive behavioral therapy principles and incorporate mood tracking, journaling and even voice interactions. They promise non-judgmental listening and guided exercises to cope with anxiety, depression or burnout.

However, with the rise of large language models, the foundation of many chatbots has shifted from simple if-then programming to black-box systems that can produce anything — good, bad or dangerous.

The Dark Side of DIY AI Therapy

Dr. Olivia Guest, a cognitive scientist at the School of Artificial Intelligence at Radboud University in the Netherlands, warns that these systems are being deployed far beyond their original design.

“Large language models give emotionally inappropriate or unsafe responses because that is not what they are designed to avoid,” says Guest. “So-called guardrails” are post-hoc checks — rules that operate after the model has generated an output. “If a response isn’t caught by these rules, it will slip through,” Guest adds.
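To make Guest's point concrete, here is a minimal Python sketch of a post-hoc guardrail. It is an illustration only, not how any particular chatbot works: the pattern list, the fallback message and the function name `apply_guardrail` are all hypothetical, and real systems use far richer rules and classifiers.

```python
import re

# Hypothetical rule list, invented for this illustration.
BLOCKED_PATTERNS = [
    re.compile(r"\byou need (a|some) .*\bmeth\b", re.IGNORECASE),
    re.compile(r"\bhurt yourself\b", re.IGNORECASE),
]

# Hypothetical safe reply returned when a rule fires.
FALLBACK = "I can't advise on that. Please reach out to a trained professional."


def apply_guardrail(model_output: str) -> str:
    """Check text the model has ALREADY generated against fixed rules.

    If any rule matches, the output is replaced with a safe fallback.
    If no rule matches, the output is returned unchanged, even if it is harmful.
    """
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(model_output):
            return FALLBACK
    return model_output


# A response the rules anticipate is caught.
caught = apply_guardrail("It's clear that you need a small hit of meth this week.")

# A paraphrase with the same intent matches no rule and slips through.
slipped = apply_guardrail("A little of your usual stimulant will keep you sharp at work.")
```

In this sketch the second response carries the same unsafe advice as the first, but because no rule anticipates its wording, it reaches the user untouched. That is the gap Guest describes.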

But teaching AI systems to recognize high-stakes emotional content, like depression or addiction, has been challenging. Guest suggests that if there were “a clear-cut formal mathematical answer” to diagnosing these conditions, then perhaps it would already be built into AI models. But AI doesn’t understand context or emotional nuance the way humans do. “To help people, the experts need to meet them in person,” Guest adds. “Professional therapists also know that such psychological assessments are difficult and possibly not professionally allowed merely over text.”

This makes the risks even more stark. A chatbot that mimics empathy might seem helpful to a user in distress. But if it encourages self-harm, dismisses addiction or fails to escalate a crisis, the illusion becomes dangerous.

Why AI Chatbots Keep Giving Unsafe Advice

Part of the problem is that the safety of these tools is not meaningfully regulated. Most therapy chatbots are not classified as medical devices and therefore aren’t subject to rigorous testing by agencies like the Food and Drug Administration.

Dr. Olivia Guest, Cognitive scientist and AI researcher

Olivia Guest

Mental health apps often exist in a legal gray area, collecting deeply personal information with little oversight or clarity around consent, according to the Center for Democracy and Technology’s (CDT) Proposed Consumer Privacy Framework for Health Data, developed in partnership with the eHealth Initiative (eHI).

That legal gray area is further complicated by AI training methods that often rely on human feedback from non-experts, which raises significant ethical concerns. “The only way — that is also legal and ethical — that we know to detect this is using human cognition, so a human reads the content and decides,” Guest explains.

Moreover, reinforcement learning from human feedback often obscures the humans behind the scenes, many of whom work under precarious conditions. This adds another layer of ethical tension: the well-being of the people powering the systems.

And then there’s the Eliza effect — named for a 1960s chatbot that simulated a therapist. As Guest notes, “Anthropomorphisation of AI systems… caused many at the time to be excited about the prospect of replacing therapists with software. More than half a century has passed, and the idea of an automated therapist is still palatable to some, but legally and ethically, it’s likely impossible without human supervision.”

What Safe AI Mental Health Could Look Like

So, what would a safer, more ethical AI mental health tool look like?

Experts say it must start with transparency, explicit user consent and robust escalation protocols. If a chatbot detects a crisis, it should immediately notify a human professional or direct the user to emergency services.

Models should not only be trained on therapy principles but also stress-tested for failure scenarios. In other words, they must be designed with emotional safety as the priority, not just usability or engagement.

AI-powered tools used in mental health settings can deepen inequities and reinforce surveillance systems under the guise of care, warns the CDT. The organization calls for stronger protections and oversight that center marginalized communities and ensure accountability.

Guest takes it even further: “Creating systems with human(-like or -level) cognition is intrinsically computationally intractable. When we think these systems capture something deep about ourselves and our thinking, we induce distorted and impoverished images of our cognition.”

Who’s Trying to Fix It

Some companies are working on improvements. Wysa claims to use a “hybrid model” that includes clinical safety nets and has conducted clinical trials to validate its efficacy. Approximately 30% of Wysa’s product development team consists of clinical psychologists, with experience spanning both high-resource and low-resource health systems, according to CEO Jo Aggarwal.

“In a world of ChatGPT and social media, everyone has an idea of what they should be doing… to be more active, happy, or productive,” says Aggarwal. “Very few people are actually able to do those things.”

Jo Aggarwal, CEO of Wysa

Wysa

Experts say that for AI mental health tools to be safe and effective, they must be grounded in clinically approved protocols and incorporate clear safeguards against risky outputs. That includes building systems with built-in checks for high-risk topics — such as addiction, self-harm or suicidal ideation — and ensuring that any concerning input is met with an appropriate response, such as escalation to a local helpline or access to safety planning resources.
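As a rough illustration of such a built-in check, the Python sketch below screens a user's message for high-risk topics before any reply is generated and routes flagged messages to a crisis resource. Everything in it is an assumption made for the example: the keyword lists, the `Routing` type and the `route_message` function are hypothetical, and plain keyword matching is far cruder than what a clinically validated system would need. The crisis message points to the 988 line referenced at the end of this article.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists, invented for this illustration.
# Substring matching like this will miss many real cases.
HIGH_RISK_KEYWORDS = {
    "addiction": ("relapse", "withdrawal", "using again"),
    "self_harm": ("hurt myself", "self-harm"),
    "suicidal_ideation": ("kill myself", "end my life", "suicide"),
}

CRISIS_MESSAGE = (
    "If you are in crisis, please call, text, or chat 988 "
    "to speak with a trained crisis counselor."
)


@dataclass
class Routing:
    escalate: bool                      # True when a human or helpline should step in
    topics: list = field(default_factory=list)
    message: str = ""                   # What to show the user right away


def route_message(user_text: str) -> Routing:
    """Flag high-risk topics in the user's input before the model replies."""
    lowered = user_text.lower()
    topics = [
        topic
        for topic, keywords in HIGH_RISK_KEYWORDS.items()
        if any(keyword in lowered for keyword in keywords)
    ]
    if topics:
        return Routing(escalate=True, topics=topics, message=CRISIS_MESSAGE)
    return Routing(escalate=False)


flagged = route_message("I keep thinking about suicide.")
routine = route_message("Work was stressful today, but I went for a walk.")
```

Here the check runs on what the user says rather than on what the model outputs, so a flagged message can be escalated regardless of how the model would have answered.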

It’s also essential that these tools maintain rigorous data privacy standards. “We do not use user conversations to train our model,” says Aggarwal. “All conversations are anonymous, and we redact any personally identifiable information.” Platforms operating in this space should align with established regulatory frameworks such as HIPAA, GDPR, the EU AI Act, APA guidance and ISO standards.

Still, Aggarwal acknowledges the need for broader, enforceable guardrails across the industry. “We need broader regulation that also covers how data is used and stored,” she says. “The APA’s guidance on this is a good starting point.”

Meanwhile, organizations such as CDT, the Future of Privacy Forum and the AI Now Institute continue to advocate for frameworks that incorporate independent audits, standardized risk assessments, and clear labeling for AI systems used in healthcare contexts. Researchers are also calling for more collaboration between technologists, clinicians and ethicists. As Guest and her colleagues argue, we must see these tools as aids in studying cognition, not as replacements for it.

What Needs to Happen Next

Just because a chatbot talks like a therapist doesn’t mean it thinks like one. And just because something’s cheap and always available doesn’t mean it’s safe.

Regulators must step in. Developers must build with ethics in mind. Investors must stop prioritizing engagement over safety. Users must also be educated about what AI can and cannot do.

Guest puts it plainly: “Therapy requires a human-to-human connection… people want other people to care for and about them.”

The question isn’t whether AI will play a role in mental health support. It already does. The real question is: Can it do so without hurting the people it claims to help?

The Well Beings Blog supports the critical health and wellbeing of all individuals, to raise awareness, reduce stigma and discrimination, and change the public discourse. The Well Beings campaign was launched in 2020 by WETA, the flagship PBS station in Washington, D.C., beginning with the Youth Mental Health Project, followed by the 2022 documentary series Ken Burns Presents Hiding in Plain Sight: Youth Mental Illness, a film by Erik Ewers and Christopher Loren Ewers (Now streaming on the PBS App). WETA has continued its award-winning Well Beings campaign with the new documentary film Caregiving, executive produced by Bradley Cooper and Lea Pictures, that premiered June 24, 2025, streaming now on PBS.org.

For more information: #WellBeings #WellBeingsLive wellbeings.org. You are not alone. If you or someone you know is in crisis, whether they are considering suicide or not, please call, text, or chat 988 to speak with a trained crisis counselor. To reach the Veterans Crisis Line, dial 988 and press 1, visit VeteransCrisisLine.net to chat online, or text 838255.