How will generative AI be monetized in healthcare? Two competing visions are emerging.

Generative AI is advancing faster than any technology in modern memory.

When OpenAI released ChatGPT in late 2022, few in the medical community took notice. Most doctors saw it as a novelty, perhaps useful for administrative tasks or as a basic reference tool, but too unreliable for clinical care.

In just a few years, that perception has shifted. Today’s GenAI tools from companies like Google, Microsoft and Nvidia outperform most physicians on national medical exams and clinical challenges. Although none are currently recommended for patient use without physician oversight, that restriction is likely to be lifted soon. GenAI capabilities continue to double annually, and OpenAI’s GPT-5 model is expected to launch within days.

That raises a pressing question: How will generative AI be monetized in healthcare?

Two competing visions are emerging. The first follows a familiar playbook: tech companies developing new FDA-approved tools for diagnosis and treatment. The second is clinician-led and would allow patients to use readily available, inexpensive large language models to manage their chronic diseases and assess new symptoms.

To understand the advantages of each approach, it’s first helpful to examine how different generative AI tools are from the AI applications used in medicine today.

The Current Standard: Narrow AI

Healthcare’s traditional AI tools rely on technologies developed more than 25 years ago. Known as “narrow AI,” these models are trained on large datasets to solve specific, well-defined problems.

These applications are developed by training AI models to compare two datasets, detect dozens of subtle differences between them and assign a probability factor to each. For reliable results, the training data must be objective, accurate and replicable. That’s why nearly all narrow AI tools are applied in visual specialties like radiology, pathology and ophthalmology — not cognitive fields that depend on subjective entries in the electronic medical record. Although these tools outperform clinicians, they are “narrow” and can be used only for the specific task they were designed to complete.

Take mammography, for example. Early-stage breast cancer can mimic benign conditions such as fibrocystic disease, causing radiologists to disagree when interpreting the same image. A narrow AI model trained on 10,000 mammograms (half from patients with confirmed cancer and half without) can detect far more differences than the human eye, resulting in 10-20% greater diagnostic accuracy than doctors.
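For readers who want to see the underlying idea in code, here is a minimal sketch of a narrow classifier: it learns from two labeled groups and then assigns a probability to a new case. Everything in it is an assumption made for illustration. The two numeric "features" are synthetic stand-ins for image measurements, the model is a simple logistic regression rather than the deep networks used in real mammography tools, and no real patient data or published result is represented.

```python
import math
import random


def make_synthetic_cases(n, seed=0):
    """Return (features, label) pairs; label 1 = confirmed cancer, 0 = no cancer.

    The two features are invented numbers, not real image measurements.
    """
    rng = random.Random(seed)
    cases = []
    for i in range(n):
        label = i % 2  # half positive, half negative, as in the example above
        center = 1.0 if label == 1 else -1.0
        features = [rng.gauss(center, 1.0), rng.gauss(center, 1.0)]
        cases.append((features, label))
    return cases


def predict_probability(weights, bias, features):
    """Logistic model: map a weighted sum of features to a probability in (0, 1)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(cases, epochs=50, learning_rate=0.1):
    """Fit the weights with plain stochastic gradient descent on log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in cases:
            error = predict_probability(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def accuracy(weights, bias, cases):
    """Share of cases where the 0.5 probability cutoff matches the true label."""
    correct = sum(
        (predict_probability(weights, bias, features) >= 0.5) == (label == 1)
        for features, label in cases
    )
    return correct / len(cases)


training_cases = make_synthetic_cases(1000, seed=0)
held_out_cases = make_synthetic_cases(200, seed=1)  # cases the model never saw
weights, bias = train(training_cases)

print(f"Held-out accuracy: {accuracy(weights, bias, held_out_cases):.2f}")
print(f"Probability for a new case: {predict_probability(weights, bias, [0.4, 1.1]):.2f}")
```

The sketch also shows why such a tool is "narrow": the trained weights mean something only for the one question and the one kind of input the model was trained on.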

To evaluate narrow AI tools, the FDA assesses both the accuracy of the training data and the consistency of the tool’s performance, similar to how it approves new drugs. This approach, however, doesn’t work for generative AI. The development process and resulting technology are fundamentally different.

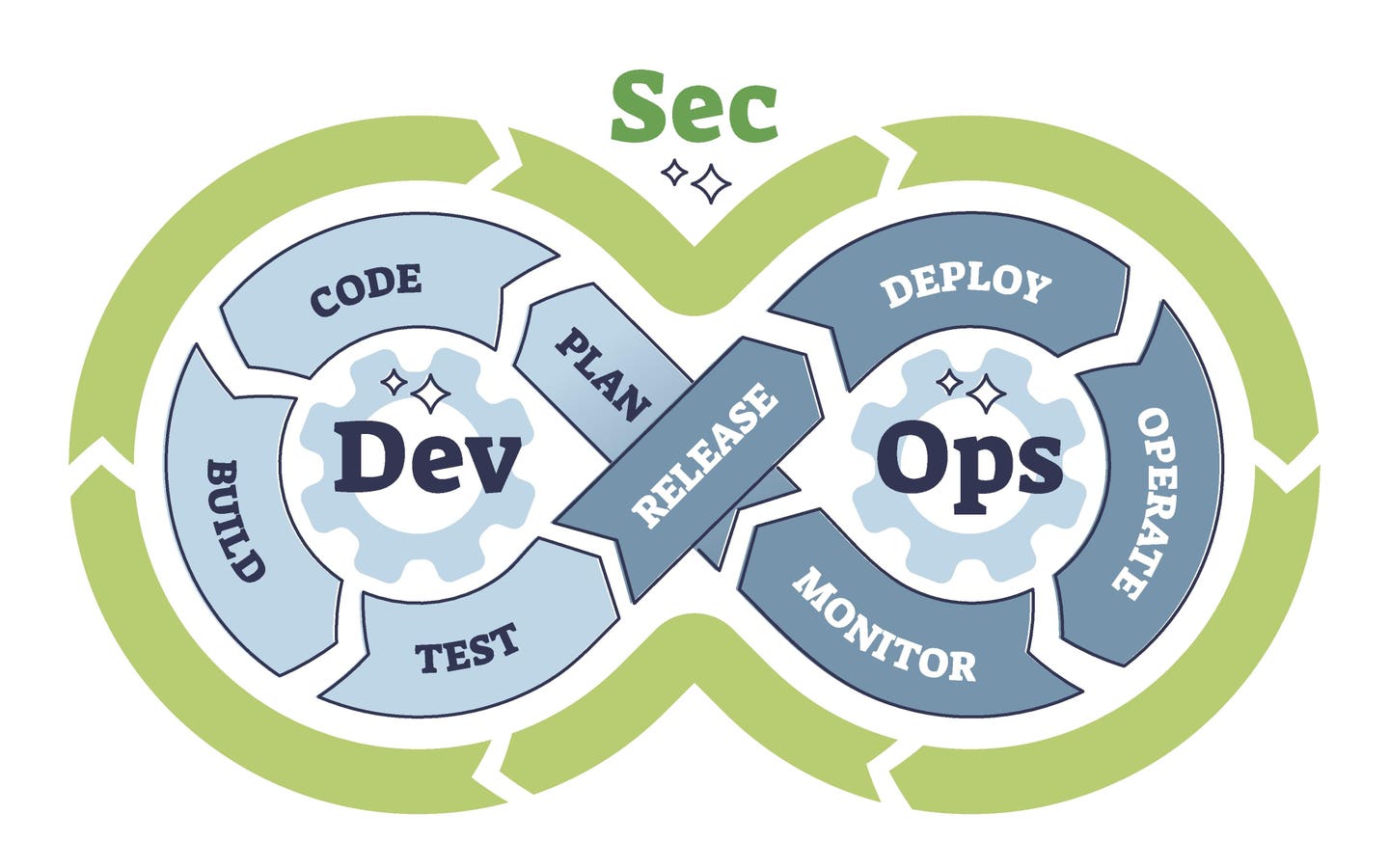

The New Opportunity: Generative AI

Today’s large language models (LLMs) like ChatGPT, Claude and Gemini are trained on the near-totality of internet-accessible content, including thousands of medical textbooks and academic journals.

This broad, in-depth training enables LLMs to respond to virtually any medical question. But unlike narrow AI, their answers are highly dependent on how users frame their questions, prompt the model and follow up for clarification. That user dependency makes traditional FDA validation, which relies on consistent and repeatable responses, effectively impossible.

However, the multimodal nature of generative AI (working across writing, audio, images and video) opens a wide range of opportunities for clinicians.

For example, I recently spoke with a surgeon who used ChatGPT to interpret a post-operative chest X-ray. The AI not only provided an accurate assessment, but it did so immediately, a full 12 hours before the radiologist issued a formal report. Had there been a serious problem that the surgeon failed to recognize, the LLM would have proven lifesaving. Even without formal approval, physicians and patients increasingly consult GenAI for medical expertise.

While there’s still debate over whether LLMs are ready for independent clinical use by patients, the pace of progress is breathtaking.

Microsoft recently reported that its Diagnostic Orchestrator tool achieved 85.5% accuracy across 304 New England Journal of Medicine case studies, compared to only 20% for physicians.

How Will Generative AI Be Monetized In Medicine?

As generative AI becomes more powerful each year, two distinct approaches are emerging for how it might be monetized in healthcare.

Each model has trade-offs:

1. Entrepreneurial Tech Model

Medical care often fails to deliver optimal outcomes at a price patients can afford. This monetization model would seek to close those gaps.

Take diabetes, where fewer than half of patients achieve adequate disease control. The result: hundreds of thousands of preventable and costly heart attacks, cases of kidney failure and limb amputations each year. In other cases, patients struggle to access timely, affordable advice for new symptoms or for managing their multiple chronic conditions.

One way to solve these problems would be through GenAI tools built by entrepreneurial companies. Venture funding would allow startups to apply a process called distillation to extract domain-specific knowledge from open-source foundation models like DeepSeek or Meta’s LLaMA.

They would then refine these models, training them on radiologist interpretations of thousands of X-rays, transcripts from patient advice centers or anonymized recordings of conversations with patients. The result: specialized generative AI tools designed to address specific gaps in care delivery.

Developing these tools would be expensive, both in terms of model training and the FDA approval process. Companies would also face legal liability for adverse outcomes. Still, if successful, these tools would likely command high prices and deliver substantial profits.

2. Clinician-Led GenAI Education

Although less profitable than an FDA-approved device or bot, the second path to GenAI monetization would prove easier to develop, much less expensive and far more transformative.

Rather than developing new software, this approach empowers patients to use existing large language models (ChatGPT, Gemini or Claude) to access similar expertise. In this model, clinicians, educators or national specialty societies — not private companies — would take the lead and share in the benefits.

They would create low-cost instructional materials for various patient-learning preferences ranging from digital guides and printed pamphlets to YouTube videos and short training courses. These resources would teach patients how to use any of the publicly available large language models safely and effectively.

Using these educational tools, patients would learn how to enter blood glucose readings from home monitors or blood pressure measurements from electronic cuffs. The large language model would then assess their clinical progress and suggest whether medication adjustments might be needed. Patients could also ask questions about new symptoms, receive likely diagnoses and learn when to seek immediate medical care.
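As a rough illustration of what such instructional material might teach, the sketch below turns a handful of home glucose readings into a clearly structured question that a patient could paste into any general-purpose large language model. It is only a sketch under stated assumptions: the `GlucoseReading` type, the `build_prompt` helper, the wording of the prompt, the medication and the readings are all hypothetical, and the code does not call any model or vendor API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class GlucoseReading:
    """One home-monitor reading (hypothetical structure for this example)."""
    date: str        # e.g. "2025-01-06"
    context: str     # e.g. "fasting" or "two hours after dinner"
    mg_per_dl: int


def build_prompt(readings, medications):
    """Assemble readings and medications into one plain-text question."""
    average = mean(r.mg_per_dl for r in readings)
    lines = [
        "I have type 2 diabetes and track my blood glucose at home.",
        f"My current medications: {', '.join(medications)}.",
        "My recent readings (mg/dL):",
    ]
    for r in readings:
        lines.append(f"- {r.date}, {r.context}: {r.mg_per_dl}")
    lines.append(f"Average of these readings: {average:.0f} mg/dL.")
    lines.append(
        "Please summarize any trend you see and list questions I should "
        "raise with my physician about whether my medication needs adjusting."
    )
    return "\n".join(lines)


# Invented example values, not clinical guidance.
readings = [
    GlucoseReading("2025-01-06", "fasting", 112),
    GlucoseReading("2025-01-06", "two hours after dinner", 185),
    GlucoseReading("2025-01-07", "fasting", 126),
]
prompt = build_prompt(readings, ["metformin 500 mg twice daily"])
print(prompt)
```

Giving the model dated, labeled readings and an explicit question, rather than a loose description, is the kind of habit these educational resources would aim to build, since the quality of an LLM's answer depends heavily on how the question is framed.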

In conjunction with their physician, patients could use GenAI’s image and video capabilities to identify signs of wound infections and alert their surgeons. Patients with chronic heart failure could monitor their condition more closely and catch signs of decompensation early, allowing cardiologists to intervene before hospitalization becomes necessary. A GI specialist could identify complex intestinal conditions using daily patient-reported inputs, or a neurologist could diagnose ALS by analyzing videos of a patient’s gait.

Unlike startup models that require tens of millions in funding and FDA approval, these educational tools could be developed and deployed quickly by doctors and other clinicians. Because they teach patients how to use existing tools rather than offer direct medical advice, they would avoid many regulatory burdens and face reduced legal liability. And with 40% of physicians already working part-time or in gig roles, there are hundreds, or likely thousands, of experts ready to contribute.

And since generative AI tools can provide information in dozens of languages and at varying literacy levels, they would offer unprecedented accessibility.

Which Path Will We Take?

The two models aren’t mutually exclusive, and both will likely shape the future of medicine.

Given the potential for massive financial return from the first approach, we can assume that dozens of entrepreneurs are already developing disease-focused generative AI tools.

But rather than waiting for technology companies to introduce GenAI tools, physicians working alone or in conjunction with educational companies have the opportunity to drive the process and improve our nation’s health.