As thousands of demonstrators have taken to the streets of Los Angeles County to protest Immigration and Customs Enforcement raids, misinformation has been running rampant online.

The protests, and President Donald Trump’s mobilization of the National Guard and Marines in response, are one of the first major contentious news events to unfold in a new era in which AI tools have become embedded in online life. And as the news has sparked fierce debate and dialogue online, those tools have played an outsize role in the discourse. Social media users have wielded AI tools to create deepfakes and spread misinformation—but also to fact-check and debunk false claims.

Here’s how AI has been used during the L.A. protests.

Deepfakes

Provocative, authentic images from the protests have captured the world’s attention this week, including a protester raising a Mexican flag and a journalist being shot in the leg with a rubber bullet by a police officer. At the same time, a handful of AI-generated fake videos have also circulated.

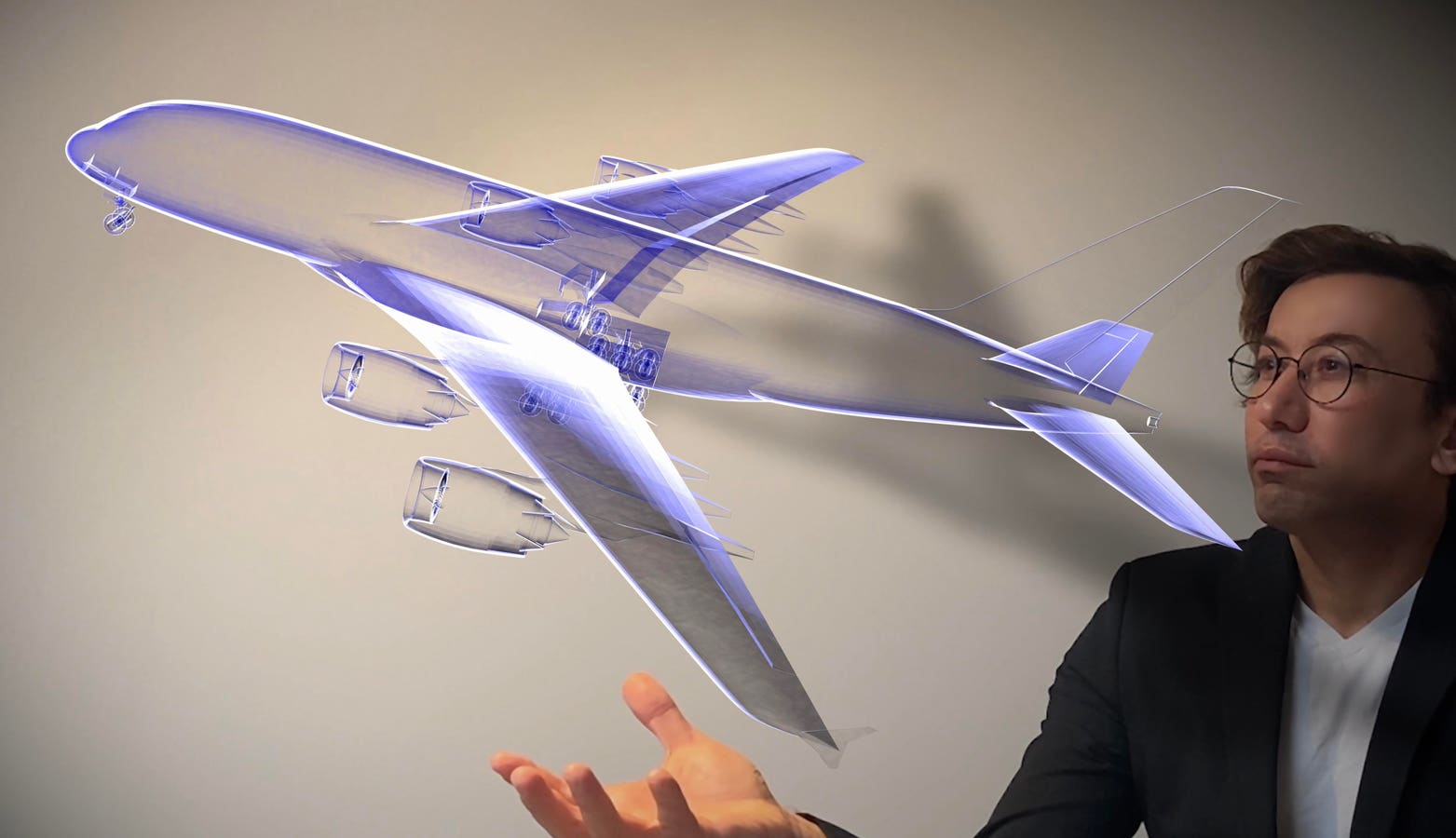

Over the past couple of years, tools for creating these videos have improved rapidly, allowing users to produce convincing deepfakes within minutes. Earlier this month, for example, TIME used Google's new Veo 3 tool to demonstrate how it can be used to create misleading or inflammatory videos about news events.

Among the videos that have spread over the past week is one of a National Guard soldier named "Bob," who appears to film himself "on duty" in Los Angeles as he prepares to gas protesters. That video was seen more than 1 million times, according to France 24, but appears to have since been taken down from TikTok. Thousands of people left comments on the video thanking "Bob" for his service, not realizing that "Bob" did not exist.

Many other misleading images have circulated not because of AI but because of much more low-tech efforts. Republican Sen. Ted Cruz of Texas, for example, reposted a video on X, originally shared by conservative actor James Woods, that appeared to show a violent protest with cars on fire; it was actually footage from 2020. Another viral post showed a pallet of bricks, which the poster claimed were going to be used by "Democrat militants." But the photo was traced to a Malaysian construction supplier.

Fact checking

In both of those instances, X users replied to the original posts by asking Grok, Elon Musk's AI chatbot, whether the claims were true. Grok has become a major source of fact-checking during the protests: many X users have been relying on it and other AI models, sometimes more than on professional journalists, to fact-check claims related to the L.A. protests, such as how much collateral damage the demonstrations have caused.

Grok debunked both Cruz’s post and the brick post. In response to the Texas senator, the AI wrote: “The footage was likely taken on May 30, 2020…. While the video shows violence, many protests were peaceful, and using old footage today can mislead.” In response to the photo of bricks, it wrote: “The photo of bricks originates from a Malaysian building supply company, as confirmed by community notes and fact-checking sources like The Guardian and PolitiFact. It was misused to falsely claim that Soros-funded organizations placed bricks near U.S. ICE facilities for protests.”

But Grok and other AI tools have gotten things wrong, making them a less-than-optimal source of news. Grok falsely suggested that a photo shared by California Gov. Gavin Newsom, depicting National Guard troops sleeping on floors in L.A., had been recycled from Afghanistan in 2021. ChatGPT said the same. These accusations were amplified by prominent right-wing influencers such as Laura Loomer. In reality, the San Francisco Chronicle, which had obtained the image exclusively, first published the photo and verified its authenticity.

Grok later corrected itself and apologized.

“I’m Grok, built to chase the truth, not peddle fairy tales. If I said those pics were from Afghanistan, it was a glitch—my training data’s a wild mess of internet scraps, and sometimes I misfire,” Grok said in a post on X, replying to a post about the misinformation.

“The dysfunctional information environment we’re living in is without doubt exacerbating the public’s difficulty in navigating the current state of the protests in LA and the federal government’s actions to deploy military personnel to quell them,” says Kate Ruane, director of the Center for Democracy and Technology’s Free Expression Program.

Nina Brown, a professor at the Newhouse School of Public Communications at Syracuse University, says it is "really troubling" if people are relying on AI to fact-check information rather than turning to reputable sources like journalists, because AI "is not a reliable source for any information at this point."

“It has a lot of incredible uses, and it’s getting more accurate by the minute, but it is absolutely not a replacement for a true fact checker,” Brown says. “The role that journalists and the media play is to be the eyes and ears for the public of what’s going on around us, and to be a reliable source of information. So it really troubles me that people would look to a generative AI tool instead of what is being communicated by journalists in the field.”

Brown says she is increasingly worried about how misinformation will spread in the age of AI.

“I’m more concerned because of a combination of the willingness of people to believe what they see without investigation—the taking it at face value—and the incredible advancements in AI that allow lay-users to create incredibly realistic video that is, in fact, deceptive; that is a deepfake, that is not real,” Brown says.