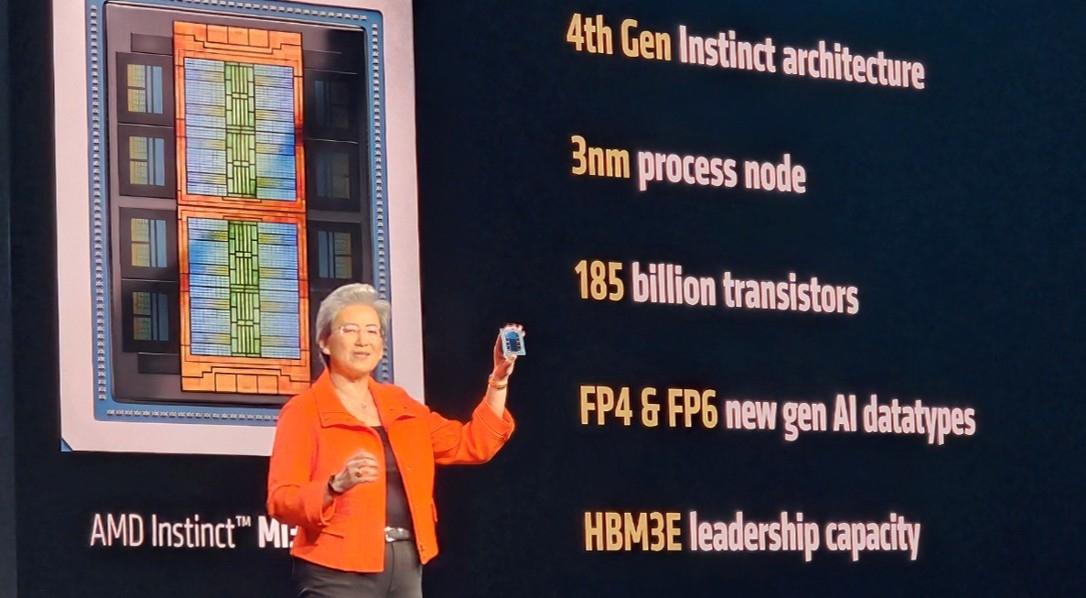

AMD CEO Dr. Lisa Su announces the new Instinct MI350 series GPU accelerator.

Today, AMD held its Advancing AI event in San Jose, California. This year’s event centered around the launch of the new Instinct MI350 series GPU accelerators for servers, advances to the company’s ROCm (Radeon Open Compute) software development platform for the Instinct accelerators, and AMD’s data center system roadmap.

Disclosure: My company, Tirias Research, has consulted for AMD and other companies mentioned in this article.

The Instinct MI350 & MI355X GPU accelerator specs

First up is the latest in the Instinct product line, the MI350 and MI355X. Like its main competitor in the AI segment, AMD has committed to an annual cadence for new server AI accelerators. The MI350 and MI355X are the latest and are based on the new CDNA 4 architecture. The MI350 is a passively cooled solution that utilizes heat sinks and fans, whereas the MI355X is a liquid-cooled solution that employs direct-to-chip cooling. The liquid cooling system provides two significant benefits: the first is an increase in Total Board Power (TBP) from 1000W to 1400W, and the second is an increase in rack density from 64 GPUs per rack to up to 128 GPUs per rack.

According to AMD, the MI350 series of GPU accelerators provides approximately a 3x improvement in both AI training and inference over the previous MI300 generation, with competitive performance equal to or better than the competition on select AI models and workloads. (Tirias Research does not provide competitive benchmark information unless it can verify it).

Structurally, the MI350 series is similar to the previous MI300 generation, utilizing 3D hybrid bonding to stack an Infinity Fabric die, two I/O dies, and eight compute dies on top of a silicon interposer. The most significant changes are the shift to the CDNA 4 compute architecture, the use of the latest HBM3E high-speed memory, and architectural enhancements to the I/O, which resulted in two dies rather than four. The various dies are manufactured on TSMC’s N3 and N6 process nodes. The result is an increase in performance efficiency throughout the chip while maintaining a small footprint.

New ROCm 7 features

The Second significant announcement, or group of announcements, is around ROCm, AMD’s open-source software development platform for GPUs. The release of ROCm 7 demonstrates just how far the software platform has come. One of the most significant changes is the ability to run PyTorch natively on Windows on an AMD-enabled PC, a huge plus for developers, and making ROCm truly portable across all AMD platforms. ROCm now supports all major AI frameworks and models, including 1.8 million models on Hugging Face. ROCm 7 also provides an average of 3 times better training performance than ROCm 6 on leading industry models and 3.5 times higher inference performance. In addition the enhancements to ROCm, AMD is doing more outreach to developers, including a developer track at the Advancing AI event, and the availability of the new AMD Developer Cloud accessed through GitHub.

Helios AI Rack

The third major announcement was the forthcoming rack architecture, scheduled for 2026, called Helios. Like the rest of the industry, AMD is shifting its system focus to the rack as the platform, rather than just the server tray. The Helios will be a new rack architecture based on the latest AMD technology for processing, AI, and networking. Helios will feature the Zen 6 Epyc processor, the Instinct MI400 GPU accelerator based on the CDNA Next architecture, and the Pensando Vulcano AI NIC for scale-out networking. For scale-up networking between GPU accelerators within a rack, Helios will leverage UALink. The UALink 1.0 specification was released in April. Marvell and Synopsys have both announced the availability of UALink IP, and switch chips are anticipated from several vendors, including UALink partners like Astera Labs and Cisco.

Additionally, an A-list of partners and customers joined AMD at Advancing AI, including Astera Labs, Cohere, Humain, Meta, Marvell, Microsoft, OpenAI, Oracle, Red Hat, and xAI. Humain was the most interesting because of its joint venture with AMD and other silicon vendors to build an AI infrastructure in Saudi Arabia. Humain has already begun the construction of eleven data centers with plans to add 50MW modules every quarter. Key to Humain’s strategy is leveraging the abundant power and young labor force in Saudi Arabia.

There is much more detail behind these and the extensive list of partnership announcements, but these three underscore AMD’s dedication to remaining competitive in data center AI solutions, demonstrate its consistent execution, and reinforce its position as a viable alternative provider of data center GPU accelerators and AI platforms. As the tech industry struggles to meet the demand for AI, AMD continues to enhance its server platforms to meet the needs of AI developers and workloads. While this does not leapfrog the competition, it does narrow the gap in many respects, making AMD the most competitive alternative to Nvidia.