Welcome back to In the Loop, TIME’s new twice-weekly newsletter about the world of AI.

If you’re reading this in your browser, you can subscribe to have the next one delivered straight to your inbox.

What to Know: The future of ‘sweatshop data’

You can measure time in the world of AI by the cadence of new essays with provocative titles. Another one arrived earlier this month from the team at Mechanize Work: a new startup that is trying to, er, automate all human labor. Its title? “Sweatshop data is over.”

This one caught my attention. As regular readers may know, I’ve done a lot of reporting over the years on the origins of the data that is used to train AI systems. My story “Inside Facebook’s African Sweatshop” was the first to reveal how Meta used contractors in Kenya, some earning as little as $1.50 per hour, to remove content from its platforms—content that would later be used in attempts to train AI systems to do that job automatically. I also broke the news that OpenAI used workers from the same outsourcing company to detoxify ChatGPT. In both cases, workers said the labor left them with diagnoses of post-traumatic stress disorder. So if sweatshop data really is a thing of the past, that would be a very big deal indeed.

What the essay argues — Mechanize Work’s essay points to a very real trend in AI research. To summarize: AI systems used to be relatively unintelligent. To teach them the difference between, say, a cat and a dog, you’d need to give them lots of different labeled examples of cats and dogs. The most cost-effective way to get those labels was to source them from workers in the Global South, where labor is cheap. But as AI systems have gotten smarter, they no longer need to be told basic information, the authors argue. AI companies are now desperately seeking expert data, which necessarily comes from people with PhDs, and those people won’t put up with poverty wages. “Teaching AIs these new capabilities will require the dedicated efforts of high-skill specialists working full-time, not low-skill contractors working at scale,” they write.

A new AI paradigm — The authors are, in one important sense, correct. The big money has indeed moved toward expert data. A clutch of companies, including Mechanize Work, are jostling to dominate the space, which could eventually be worth hundreds of billions of dollars, according to insiders. Many of them aren’t just hiring experts. They are also building dedicated software environments to help AI learn from experience at scale, in a paradigm known as reinforcement learning with verifiable rewards. The approach takes inspiration from DeepMind’s 2017 model AlphaZero, which didn’t need to observe humans playing chess or Go, and instead became superhuman just by playing against itself millions of times. In the same vein, these companies are trying to build software that would allow AI to “self-play,” with the help of experts, on questions of coding, science, and math. If they can get that to work, top researchers believe, it could unlock major new leaps in capability.

There’s just one problem — While all of this is true, it does not mean that sweatshop data has gone away. “We don’t observe the workforce of data workers, in the classical sense, decreasing,” says Milagros Miceli, a researcher at the Weizenbaum Institute in Berlin who studies so-called sweatshop data. “Quite the opposite.”

Meta and TikTok, for example, still rely on thousands of contractors all over the world to remove harmful content from their platforms—a task that has stubbornly resisted full AI automation. Other types of low-paid tasks, typically carried out in places like Kenya, the Philippines, and India, are booming.

“Right now what we are seeing is a lot of what we call algorithmic verification: people checking in on existing AI models to ensure that they are functioning according to plan,” Miceli says. “The funny thing is, it’s the same workers. If you talk to people, they will tell you: I have done content moderation. I have done data labeling. Now I am doing this.”

Who to Know: Shengjia Zhao, Chief Scientist, Meta Superintelligence Labs

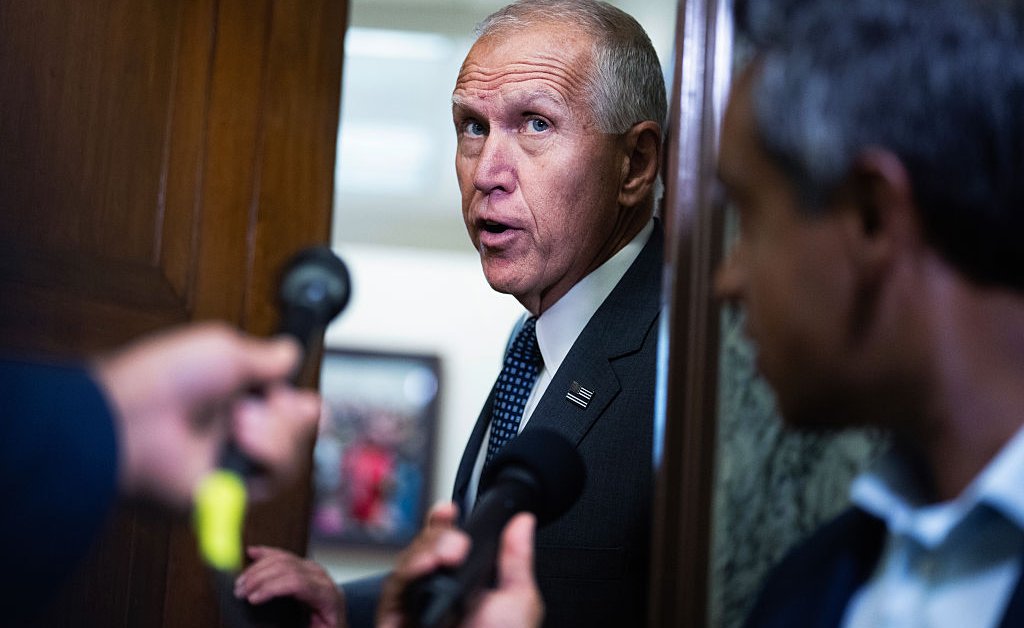

Mark Zuckerberg promoted AI researcher Shengjia Zhao to chief scientist of the new effort inside Meta to create “superintelligence.” Zhao joined Meta last month from OpenAI, where he worked on the o1-mini and o3-mini models.

Zuck’s memo — In a note to staff on Saturday, Zuckerberg wrote: “Shengjia has already pioneered several breakthroughs including a new scaling paradigm and distinguished himself as a leader in the field.” Zhao, who studied for his undergraduate degree in Beijing and graduated from Stanford with a PhD in 2022, “will set the research agenda and scientific direction for our new lab,” Zuckerberg wrote.

Meta’s recruiting push — Zuckerberg has ignited a fierce war for talent in the AI industry by offering top AI researchers pay packages worth up to $300 million, according to reports. “I’ve lost track of how many people from here they’ve tried to get,” Sam Altman told OpenAI staff in a Slack message, according to the Wall Street Journal.

Bad news for LeCun — Zhao’s promotion is yet another sign that Yann LeCun—who until the hiring blitz this year was Meta’s most senior AI scientist—has been put out to pasture. LeCun, a notable critic of the idea that LLMs will scale to superintelligence, holds views that appear increasingly at odds with Zuckerberg’s bullishness. Meta’s Superintelligence team is clearly now a higher priority for Zuckerberg than the separate group LeCun runs, called Facebook AI Research (FAIR). In a note appended to his announcement of Zhao’s promotion on Threads, Zuckerberg denied that LeCun had been sidelined. “To avoid any confusion, there’s no change in Yann’s role,” he wrote. “He will continue to be Chief Scientist for FAIR.”

AI in Action

One of the big ways AI is already affecting our world is in the changes it’s bringing to our information ecosystem. News publishers have long complained that Google’s “AI Overviews” in its search results have reduced traffic, and therefore revenues, harming their ability to employ journalists and hold the powerful to account. Now we have new data from the Pew Research Center that puts that complaint into stark relief.

When AI summaries were included in search results, only 8% of users clicked through to a link, compared with 15% when no AI summary appeared, the study found. Just 1% of users clicked on a link in the AI summary itself, rubbishing the argument that AI summaries are an effective way of sending users toward publishers’ content.

As always, if you have an interesting story of AI in Action, we’d love to hear it. Email us at: [email protected]

What We’re Reading

“How to Save OpenAI’s Nonprofit Soul, According to a Former OpenAI Employee,” by Jacob Hilton in TIME

Jacob Hilton, who worked at OpenAI between 2018 and 2023, writes about the ongoing battle over OpenAI’s legal structure—and what it might mean for the future of our world.

“The nonprofit still has no independent staff of its own, and its board members are too busy running their own companies or academic labs to provide meaningful oversight,” he argues. “To add to this, OpenAI’s proposed restructuring now threatens to weaken the board’s authority when it instead needs reinforcing.”