Click-bait and other attention-grabbing online content can cause brain rot in large language models, a new study finds.


Brain rot isn’t just for humans anymore. The thoroughly modern affliction also impacts artificial intelligence, according to new research.

The term brain rot has become shorthand for how the endless consumption of trivial or unchallenging online content can dull human cognition — eroding focus, memory, discipline and social judgment. The phrase is so emblematic of our screen-fixated times that Oxford University Press named it word of the year in 2024.

Researchers from the University of Texas at Austin, Texas A&M University and Purdue University got to thinking: If the large language models we increasingly rely on for information are trained on the same firehose of sludge humans constantly consume, what does that mean for the tools’ own “brains”? They explore that question in a new study that appears as a preprint on research platform arXiv and is currently undergoing peer review.

What alarmed the researchers most is that the kind of viral or attention-grabbing text that typically trends online turns out to be cognitive junk for AI that leads to lapses in reasoning, factual inconsistencies and an inability to maintain logical coherence in longer contexts, among other detriments.

“The biggest takeaway is that language models mirror the quality of their data more intimately than we thought,” study co-authors Junyuan Hong and Atlas Wang said in a joint written response to my questions. “When exposed to junk text, models don’t just sound worse, they begin to think worse.” Hong is a postdoctoral fellow at UT Austin and an incoming assistant professor at the National University of Singapore, while Wang is an associate professor in UT Austin’s department of electrical and computer engineering.

But How To Define ‘Junk Content’?

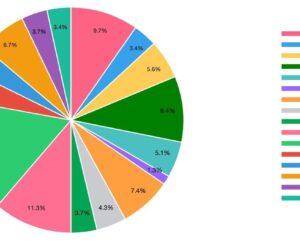

To test their “LLM Brain Rot Hypothesis,” the research team constructed “junk” and control datasets from social media platform X. The junk set included highly popular content engineered to grab attention with minimal information: click-bait threads, recycled meme commentary, posts positioned to spark outrage and algorithmically generated listicles.

Such content “looks clean and fluent, so traditional data quality classifiers think it’s fine, but it quietly degrades reasoning because it teaches models to mimic attention rather than understanding,” Hong and Wang said.

They then trained LLMs including Meta’s open-source Llama3 and versions of Alibaba’s Qwen LLM on the junk — and observed resulting cognitive decay. Strikingly, the damage caused by the low-quality content had a lasting impact on the models.

“Even after extensive ‘rehabilitation’ on cleaner data, the degraded models never fully recovered,” the researchers reported. “That persistence means ‘AI brain rot’ isn’t just a temporary glitch. It’s a form of cognitive scarring. For users, that translates into models that appear fluent but reason shallowly — confident, yet confused.”

Former DeepMind Scientist Weighs In

Ilia Shumailov, a former Google DeepMind AI senior research scientist who was not involved with the study, isn’t surprised by the results, saying they align with academic literature on model poisoning. This term describes what happens when attackers manipulate AI training data, introducing vulnerabilities and biases for their own purposes.

“It is hard to extrapolate from small studies to what would happen at scale,” Shumailov said. “Most of the internet data is quite bad quality, yet clearly we can get very capable models. I read studies like this as cautionary tales that tell us that data we train on should be carefully checked.”

That’s already happening, notes Gideon Futerman, a special projects associate at the Center for AI Safety, a San Francisco-based nonprofit that promotes the safe development and deployment of artificial intelligence.

‘Cognitive Hygiene’ And The Future Of AI

“Leading AI corporations spend lots of effort trying to improve what data is used during training,” Futerman said, adding that he’s more concerned about data poisoning than about AI models being trained on low-quality data. “Improvement on what pre-training data is used is one of the reasons that AI systems have been getting better.”

Hong and Wang call such training assessments “cognitive hygiene,” and say the future of AI safety may rely on the integrity of the data that shapes models, especially as more of that data is itself AI-generated.

“Understanding this frontier will require deeper, more systematic study,” they said. “As online content becomes increasingly AI-synthetic and engagement-driven, future AI models also risk inheriting distortions in reasoning and representation embedded within that data.”