Last week, OpenAI’s video-generating app, Sora, achieved a milestone: it hit one million downloads in under five days, technically outpacing the launch of its sibling, ChatGPT.

According to data from Appfigures cited by TechCrunch, this surge happened despite Sora being invite-only and restricted to iOS users in the U.S. and Canada. For context, ChatGPT was publicly available and U.S.-only in its first week, making Sora’s explosive adoption a testament to the ferocious public appetite for generative video.

Sora has become, as The Verge describes it, “a TikTok for deepfakes.” Its feed is a surreal scroll of user-generated content powered by the Sora 2 model, which lets anyone create 10-second, photorealistic videos of anything they can imagine. The app’s standout feature, “cameos,” allows users to upload a selfie and create an AI double: a digital twin, complete with a synthetic voice, that can be inserted into any scenario.

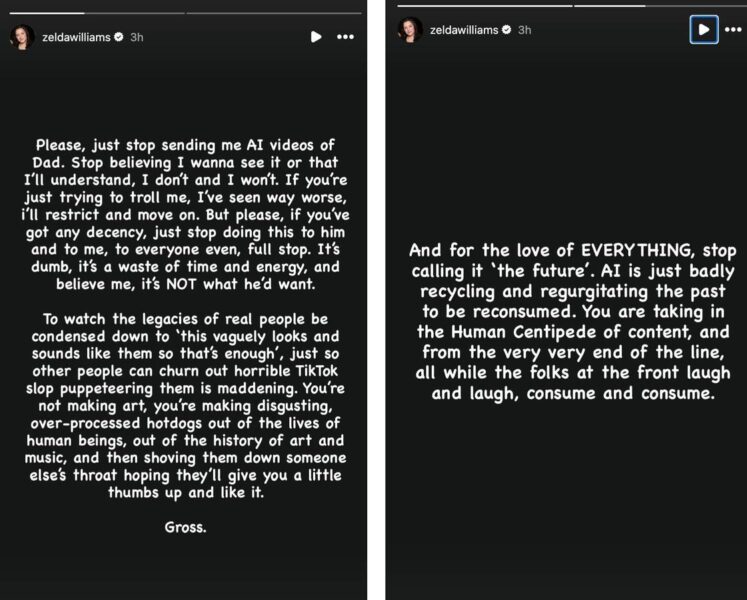

The result has been chaos. The platform’s unofficial mascot quickly became OpenAI’s own CEO, Sam Altman, who appeared in countless user-generated videos “stealing, rapping, or even grilling a dead Pikachu.” More disturbingly, the technology has reopened painful ethical wounds. Zelda Williams, daughter of the late Robin Williams, posted a plea asking people to stop sending her AI-generated images of her father.

“If you’ve got any decency, just stop doing this to him and to me, to everyone even, full stop,” Williams shared. “To watch the legacies of real people be condensed down to ‘this vaguely looks and sounds like them so that’s enough’, just so other people can churn out horrible TikTok slop puppeteering them, is maddening.”

Zelda Williams’s Instagram story on deepfakes of her father (Credit: Zelda Williams / TechCrunch)

The public’s initial, unbridled enthusiasm for Sora’s capabilities has swiftly given way to more sober, even sinister, realizations. Part of the problem is the lack of granular control: early cameo settings were a blunt instrument, letting users choose only whether their AI double could be used by “mutuals,” “approved people,” or “everyone.” It was a system ripe for misuse, mockery, and misinformation.

Over the weekend, OpenAI rolled out an update, and Sora’s head, Bill Peebles, announced new controls that let users dictate how their digital doubles behave. Users can now set boundaries for their cameo, such as preventing it from appearing in videos about politics, blocking it from saying certain words, or setting aesthetic preferences. OpenAI staffer Thomas Dimson illustrated how users could instruct their AI double to always “wear a ‘#1 Ketchup Fan’ ball cap in every video.”

The proof of the pudding, perhaps, lies in the execution. The history of AI safety is a history of workarounds: ChatGPT itself has been coaxed into offering dangerous advice, and Sora’s own feeble watermark has already been circumvented.

Sora’s record-breaking download numbers show that demand for this technology is enormous, while the backlash and the rapid, reactive rollout of new controls show that both the industry and the public are navigating uncharted territory in real time. For the industry, that means a behind-the-scenes scramble to build guardrails while the rocket is already in flight.

While Peebles promises to “hillclimb on making restrictions even more robust,” the cat-and-mouse game between platform safeguards and user ingenuity is a fundamental, perhaps unwinnable war.