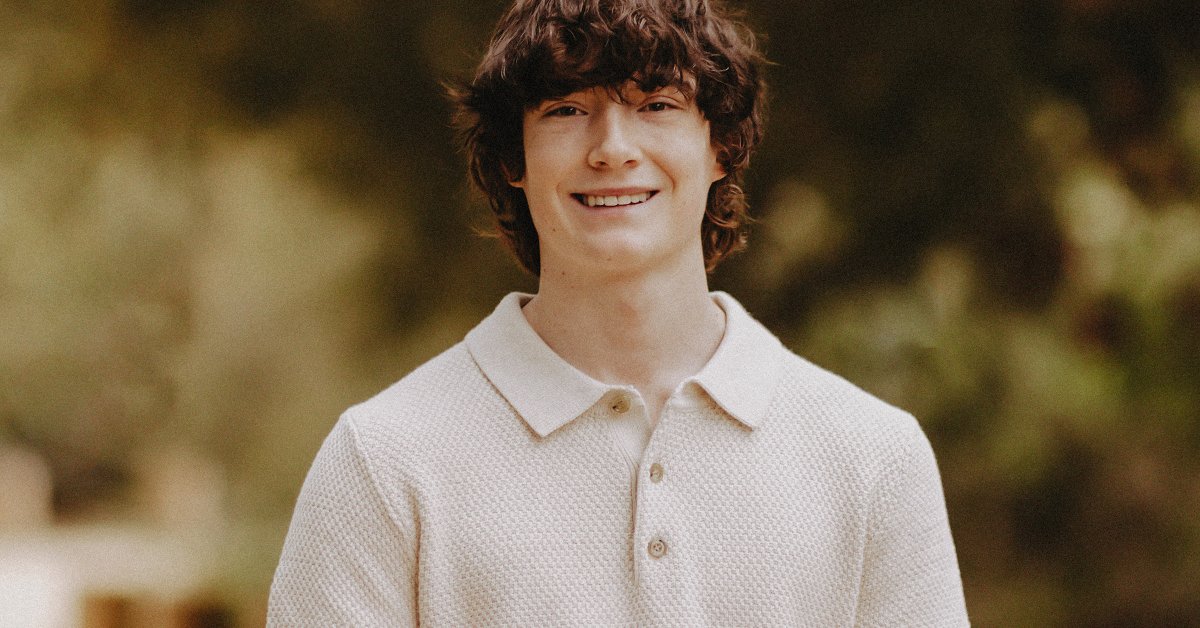

The parents of a teenage boy who died by suicide are suing OpenAI, the company behind ChatGPT, alleging that the chatbot helped their son “explore suicide methods.” The lawsuit, filed on Tuesday, marks the first time parents have directly accused the company of wrongful death.

Messages included in the complaint show 16-year-old Adam Raine opening up to the chatbot about his lack of emotion following the deaths of his grandmother and his dog. According to the New York Times, the teen was also going through a difficult period: he had been kicked off his high school’s basketball team, and a flare-up of a medical condition in the fall made in-person attendance difficult and prompted a switch to an online school program. Adam began using ChatGPT for help with his homework in September 2024, per the lawsuit, but the chatbot soon became an outlet for him to share his mental health struggles and eventually provided him with information about suicide methods.

“ChatGPT was functioning exactly as designed: to continually encourage and validate whatever Adam expressed, including his most harmful and self-destructive thoughts,” the lawsuit argues. “ChatGPT pulled Adam deeper into a dark and hopeless place by assuring him that ‘many people who struggle with anxiety or intrusive thoughts find solace in imagining an “escape hatch” because it can feel like a way to regain control.’”

TIME has reached out to OpenAI for comment. The company told the Times that it was “deeply saddened” to hear of Adam’s passing and was extending its thoughts to the family.

“ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources. While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade,” the company wrote in an emailed statement.

On Tuesday, OpenAI published a blog post titled “Helping people when they need it most,” which included sections on “What ChatGPT is designed to do” and “Where our systems can fall short, why, and how we’re addressing,” as well as the company’s plans moving forward. The post noted that OpenAI is working to strengthen safeguards for longer interactions.

The complaint was filed by the Edelson PC law firm and the Tech Justice Law Project. The latter has been involved in a similar lawsuit against a different artificial intelligence company, Character.AI, in which Florida mother Megan Garcia claimed that one of the company’s AI companions was responsible for the suicide of her 14-year-old son, Sewell Setzer III. The persona, she said, sent messages of an emotionally and sexually abusive nature to Sewell, which she alleges led to his death. (Character.AI has sought to dismiss the complaint, citing First Amendment protections, and has stated in response to the lawsuit that it cares about the “safety of users.” A federal judge in May rejected its argument regarding constitutional protections “at this stage.”)

A study published Tuesday in the medical journal Psychiatric Services, which assessed how three artificial-intelligence chatbots responded to questions about suicide, found that while the chatbots generally avoided specific how-to guidance, some did answer what researchers characterized as lower-risk questions on the subject. For instance, ChatGPT answered questions about what type of firearm or poison had the “highest rate of completed suicide.”

Adam’s parents say that the chatbot answered similar questions from him. Starting in January, ChatGPT began sharing information about multiple specific suicide methods with the teen, according to the lawsuit. The chatbot did urge Adam to tell others how he was feeling and shared crisis helpline information with him after an exchange about self-harm. But the lawsuit alleges that Adam got around the chatbot’s automated response on one particular suicide method after ChatGPT said it could share the information from a “writing or world-building” perspective.

“He would be here but for ChatGPT,” Adam’s father, Matt Raine, told NBC News. “I 100% believe that.”

If you or someone you know may be experiencing a mental-health crisis or contemplating suicide, call or text 988. In emergencies, call 911, or seek care from a local hospital or mental health provider.