Can AI be used to make the criminal justice system more fair and efficient, or will it only reinforce harmful biases? Experts say that it has so far been deployed in worrying ways—but that there is potential for positive impact.

Today, AI tech has reached nearly every aspect of the criminal justice system. It is being used in facial recognition systems to identify suspects; in “predictive policing” strategies to formulate patrol routes; in courtrooms to assist with case management; and by public defenders to comb through evidence. But while advocates point to gains in efficiency and fairness, critics raise serious questions about privacy and accountability.

Last month, the Council on Criminal Justice launched a nonpartisan task force on AI to study how the technology could be used safely and ethically in the criminal justice system. Supported by researchers at RAND, the group will eventually turn its findings into recommendations for policymakers and law enforcement.

“There’s no question that AI can yield unjust results,” says Nathan Hecht, the task force’s chair and a former Texas Supreme Court Chief Justice. “This task force wants to bring together tech people, criminal justice people, community people, experts in various different areas, and really sit down to see how we can use it to make the system better and not cause the harm that it’s capable of.”

Risks of AI in law enforcement

Many courts and police departments are already using AI, Hecht says. “It’s very piecemeal: Curious people going, ‘Oh, wow, there’s this AI out here, we could use it over in the criminal court.’”

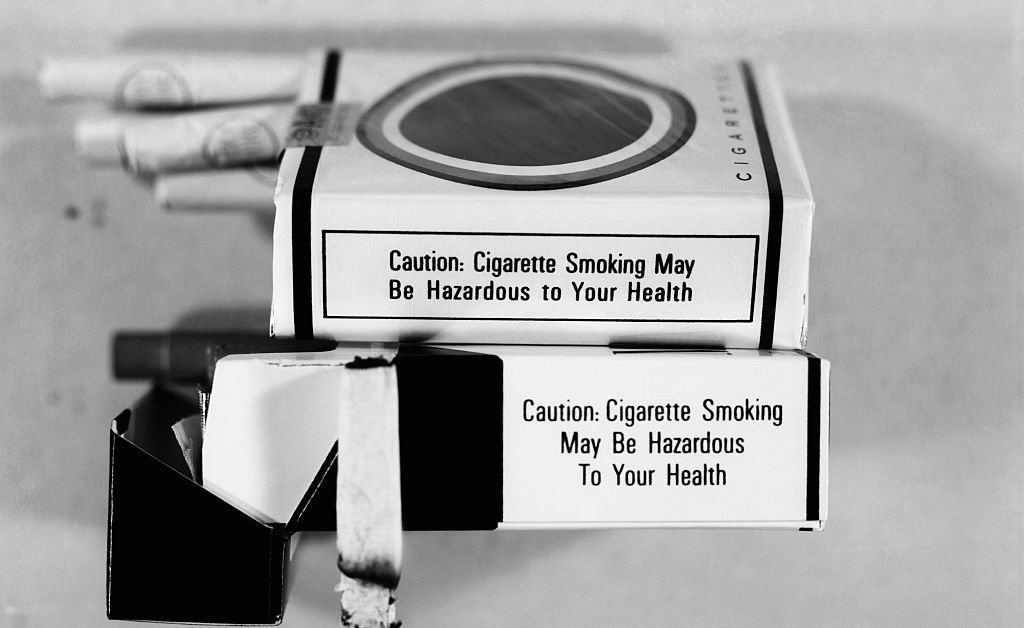

But because there are few standards for how to deploy AI, civil rights watchdogs have grown concerned that law enforcement agencies are using it in dangerous ways. Thousands of agencies have come to rely on facial recognition technology sold by companies like Clearview AI, which hosts a database of billions of images scraped from the internet. Black people are overrepresented in many of the databases police search, in part because they live in communities that are overpoliced. Many facial recognition systems are also less accurate at distinguishing between Black people’s faces, which can lead to higher misidentification rates.

Last year, the Innocence Project, a legal nonprofit, found at least seven wrongful arrests stemming from facial recognition technology; in six of them, the wrongfully accused person was Black. Walter Katz, the organization’s director of policy, says that police sometimes make arrests based solely on facial recognition matches, rather than treating those matches as a starting point for a larger investigation. “There’s an over-reliance on AI outputs,” he says.

Katz says that when he went to a policing conference last fall, “it was AI everywhere.” Vendors were aggressively hawking technology tools that claimed to solve real problems in police departments. “But in making that pitch, there was little attention to any tradeoffs or risks,” he says. For instance, critics worry that many of these AI tools will increase surveillance of public spaces, including the monitoring of peaceful protesters, and that so-called “predictive policing” will intensify law enforcement’s crackdowns on already overpoliced areas.

Where AI could help

However, Katz concedes that AI does have a place in the criminal justice system. “It’ll be very hard to wish AI away—and there are places where AI can be helpful,” he says. For that reason, he joined the Council on Criminal Justice’s AI task force. “First and foremost is getting our arms wrapped around how fast the adoption is. And if everyone comes from the understanding that having no policy whatsoever is probably the wrong place to be, then we build from there.”

Hecht, the task force’s chair, sees several areas where AI could be helpful in the courtroom, such as improving the intake process for people who have been arrested, or helping identify who qualifies for diversion programs, which allow offenders to avoid convictions. He also hopes the task force will recommend which uses of AI should explicitly not be approved in criminal justice, along with steps to preserve the public’s privacy. “We want to try to gather the expertise necessary to reassure the users of the product and the public that this is going to make your experience with the criminal justice system better—and after that, it’s going to leave you alone,” he says.

Meanwhile, plenty of other independent efforts are trying to use AI to improve the justice process. One startup, JusticeText, hopes to use AI to narrow the resource gap between prosecutors and public defenders, the latter of whom are typically severely understaffed and underresourced. JusticeText built a tool for public defenders that sorts through hours of 911 calls, police body camera footage, and recorded interrogations, analyzing them to determine whether, for example, police made inconsistent statements or asked leading questions.

“We really wanted to see what it looks like to be a public defender-first, and try to level that playing field that technology has in many ways exacerbated in past years,” says founder and CEO Devshi Mehrotra. JusticeText is working with roughly 75 public defender agencies across the country.

Recidiviz, a criminal justice reform nonprofit, has also been testing several ways of integrating AI into its workflows, including giving parole officers AI-generated summaries of their clients’ case histories. “You might have 80 pages of case notes going back seven years on this person that you’re not going to read if you have a caseload of 150 people, and you have to see each one of them every month,” says Andrew Warren, Recidiviz’s co-founder. “AI could give very succinct highlights of what this person has already achieved and what they could use support on.”

The challenge for policymakers and the Council on Criminal Justice’s task force, then, is to develop standards and oversight mechanisms so that the benefits of AI’s efficiency gains outweigh its capacity to amplify existing biases. Hecht also hopes to guard against a future in which a black-box AI makes life-changing decisions on its own.

“Should we ensure our traditional ideas of human justice are protected? Of course. Should we make sure that able judges and handlers of the criminal justice system are totally in control? Of course,” he says. “But saying we’re going to keep AI out of the justice system is hopeless. Law firms are using it. The civil justice system is using it. It’s here to stay.”