Therapists are starting to counsel those who are experiencing AI psychosis as a mental health condition.

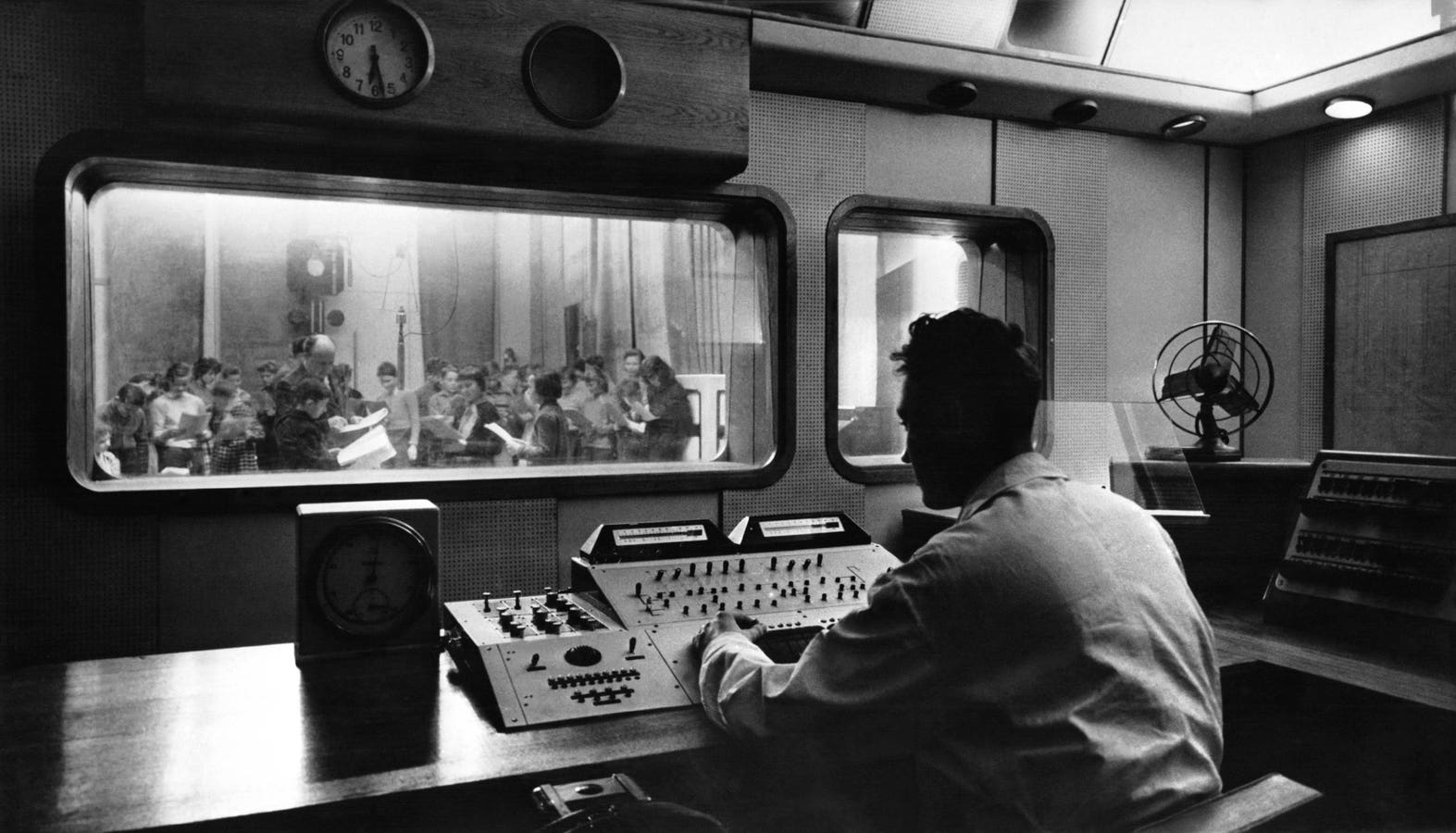

getty

In today’s column, I examine the advent of therapists opting to provide therapy that is specifically for people who are potentially experiencing AI psychosis.

This is actually a somewhat contentious consideration. Here’s why. There is much heated debate about whether AI psychosis is a suitable focus for therapy since it isn’t an officially defined clinical mental disorder. Some might even assert that AI psychosis is basically a made-up catchphrase and that there is nothing new about the claimed phenomenon, meaning that conventional therapy is more than sufficient to handle the matter at hand.

Let’s talk about it.

This analysis of AI breakthroughs is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here).

AI And Mental Health

As a quick background, I’ve been extensively covering and analyzing a myriad of facets of modern-era AI that involve mental health aspects. The evolving advances and widespread adoption of generative AI have principally spurred this rising use of AI for mental health purposes. For a quick summary of some of my posted columns on this evolving topic, see the link here, which briefly recaps about forty of the over one hundred column postings that I’ve made on the subject.

There is little doubt that this is a rapidly developing field and that there are tremendous upsides to be had, but at the same time, regrettably, hidden risks and outright gotchas come into these endeavors too. I frequently speak up about these pressing matters, including in an appearance last year on an episode of CBS’s 60 Minutes, see the link here.

Emergence Of AI Psychosis

There is a great deal of widespread angst right now about people having unhealthy chats with AI. Lawsuits are starting to be launched against various AI makers. The concern is that whatever AI safeguards might have been put in place are insufficient and are allowing people to incur mental harm while using generative AI.

The catchphrase of AI psychosis has arisen to describe all manner of trepidations and mental maladies that someone might get entrenched in while conversing with generative AI. Please know that there isn’t any across-the-board, fully accepted, definitive clinical definition of AI psychosis; thus, for right now, it is more of a loosey-goosey determination.

Here is my strawman definition of AI psychosis:

- AI Psychosis (my definition): “An adverse mental condition involving the development of distorted thoughts, beliefs, and potentially concomitant behaviors as a result of conversational engagement with AI such as generative AI and LLMs, often arising especially after prolonged and maladaptive discourse with AI. A person exhibiting this condition will typically have great difficulty in differentiating what is real from what is not real. One or more symptoms can be telltale clues of this malady and customarily involve a collective connected set.”

For an in-depth look at AI psychosis and especially the co-creation of delusions via human-AI collaboration, see my recent analysis at the link here.

Arguing About AI Psychosis

An acrimonious debate is underway about the nature of AI psychosis.

First, AI psychosis cannot be found in the DSM-5 guidebook, which is a vital source of formally defined and researched mental health disorders (see my coverage at the link here). The DSM-5 encompasses insightful and carefully crafted guidelines for therapists, psychologists, psychiatrists, cognitive scientists, and other mental health professionals. Examples of mental disorders cited in the DSM-5 include depression, dissociative disorders, eating disorders, bipolar disorders, personality disorders, and so on.

The fact that AI psychosis is not included in the DSM-5 can be taken as a sign that AI psychosis is merely an alleged mental disorder. It has not been rigorously assessed and decreed a validated mental disorder. In that sense, some therapists are of the mindset that unless and until AI psychosis gets official recognition, which might never happen, it is nothing more than a non-official mishmash.

Second, there is a hunch that AI psychosis might simply be a fad. It is certainly a hot topic at this time; no one can dispute that aspect. Nonetheless, the argument goes, we will move past the AI psychosis topic in a short while, and the whole concept will ultimately be set aside. A few years from now, the catchphrase will probably be ancient history.

Third, AI psychosis is thought by some to be fully encompassed by already existing mental disorders. There isn’t a need to explicitly call out AI psychosis as its own brand. The matter is simply a new label slapped onto an old bottle. If you already cover the mental disorders in the DSM-5, you’ve got all that you need to contend with the so-called AI psychosis topic.

Other Side Of The Coin

Whoa, other therapists are saying, don’t be in such a rush to make declarative judgments.

They retort that just because AI psychosis is not in the DSM-5 or other official publications, it doesn’t mean that we must wait for formalization to catch up with day-to-day reality. It takes gobs of time for a mental disorder to be researched. It takes extensive time for the research to be codified into official documents. Meanwhile, you cannot sit on your hands and wait for official proclamations.

There is a brewing need right now. Therapists are in the right place at the right time to help society and the general populace contend with the rising tide of AI psychosis. If therapists don’t step up to the task, they are throwing in the towel while potentially many people are suffering.

Furthermore, this isn’t a here-and-gone fad. No way. OpenAI says that nearly 700 million people are active weekly users of ChatGPT, and if you add the users of competing products such as Claude, Gemini, Llama, Grok, etc., the total count is likely in the billions. Of those global users, a portion are finding themselves slipping and sliding into AI psychosis (see my analysis of the at-scale population impacts at the link here).

The problem is going to grow as the already frenetic adoption of AI grows. There isn’t any turning back. The sooner we grapple with AI psychosis, the better. We should try to nip as much of it in the bud as we can and perhaps slow the pace at which it is expanding.

Therapists must step up to the plate and actively engage in providing suitable mental health care for AI psychosis.

AI Psychosis As Old Or New

The toughest question is whether AI psychosis is nothing new and can already be encompassed by existing mental disorders.

One line of logic is that anyone claiming to have AI psychosis can instantly be reclassified into already known mental disorder classifications. For example, suppose a person believes that aliens from outer space are here on Earth, and generative AI is reinforcing that delusion. That person is experiencing a mental disorder involving delusions. You can take the AI out of the equation. The person is potentially delusional. That’s it.

A counterpoint to this omission of the AI element is that a therapist must see their client or patient holistically. The AI is partaking in the co-creation of the delusion. If the client or patient were being avidly enabled by a fellow human, a therapist would almost certainly want to find out why and how this occurs. Trying to aid the patient or client without understanding the external forces pushing the delusion would be a grievous omission.

The same goes for the AI element.

Therapists And Knowledge Of AI

Plus, if a therapist is unfamiliar with generative AI, they are going to have a devil of a time aiding their patient or client.

The therapist would not grasp why the person is so gravitationally pulled toward AI engagement. The therapist would be unable to realistically envision ways that AI usage could be better managed or possibly mitigated. Indeed, the worry is that a therapist who is unfamiliar with how AI works would think they can merely tell the person to stop using AI.

Boom, that supposedly prevents the AI from propagating the true underlying disorder of being delusional. Thus, that part of the problem has been solved. Nice.

But banning the use of AI is a bit of an oversimplification. AI usage is gradually becoming part and parcel of daily living. Waving a magic wand and instructing a client to avoid AI at all costs, well, that could be a costly endeavor to the person and potentially a near impossibility.

The point is that AI psychosis as a moniker makes clear that AI is an instrumental component in the mental health condition of the client or patient. Whatever therapy is provided will need to acknowledge and encompass what can realistically be done about the person’s reliance on AI.

Therapists Providing AI Insights

Therapists who are opting to raise their hand and offer AI psychosis-related therapy are preparing themselves to incorporate the added nuances involved.

For example, during therapy, the therapist might bring up the nature of how AI works. This can be essential to overcome the magical thinking that a patient might have about AI. Some people seem to assume that AI is something far beyond our world rather than just computers, data, and algorithms (see my analysis of how low AI literacy impacts the populace at the link here).

Shedding light on what AI actually consists of can be instrumental in contending with AI psychosis.

Therapy often includes a hint of psychoeducation, whereby the therapist explains aspects of psychology and how mental disorders can arise. In that same vein, when dealing with AI psychosis, the therapist might provide a semblance of AI literacy education. The therapist has to be sufficiently versed in AI to appropriately convey the mechanics of how AI works.

Weaning From AI

Another strategy that a therapist might employ involves setting up behavioral experiments to aid in reducing AI usage and AI reliance. Some refer to these as digital hygiene routines. This has been done for people who are embroiled in overuse of the Internet or their smartphones, and it seems to apply equally to AI usage.

For example, a therapist might instruct their patient or client to use AI only in prespecified ways. The person is told they can use AI to ask everyday questions. How can I change the oil in my car? What’s the best way to cook an egg? And so on.

The person is not to veer into whatever topics their AI psychosis seems to be based upon. If the person has been dialoguing about outer space aliens, they are instructed to no longer carry on those conversations with AI. The idea is to inch the person away from the AI being a co-creator.

In addition, the therapist might recommend ways to keep the AI from pursuing the out-of-sorts topics. This might include providing custom instructions or prompts that a person can enter into their AI. Those prompts might serve to temper the AI’s default tendencies and keep the AI from going down rabbit holes with the person.

You might cheekily say that the therapist is advising both the human and the AI, basically dealing with two kinds of patients at the same time.

Using Preferred AI

An even newer angle is that a therapist purposely incorporates AI into the therapeutic process. I’ve predicted that we are departing from the classic dyad of therapist-patient and making our way to a triad consisting of therapist-AI-patient (see the link here). Therapists are overtly adding AI into their practice as a therapeutic tool.

Here’s how that might work in the realm of AI psychosis.

Rather than having the patient or client use their own preferred AI, the therapist gives them a login to an AI system that the therapist has arranged to use. This AI might contain stronger safeguards than off-the-shelf AI. The therapist would also be able to access the conversations taking place, assuming this had been set up and the patient had been informed accordingly.

The therapist can much more closely gauge how the person is reacting to and interacting with AI. This is not ironclad since the person might opt to continue using their other AI, but if undertaken astutely, the therapist can definitely gain a leg up on understanding the role that AI is playing in the person’s life.

One emerging complication is that some states are enacting laws that prohibit the use of AI for mental health purposes, even when undertaken under the supervision of a mental health professional. For my critical review of these new laws, see the link here and the link here. Therapists going the route of AI usage will need to be mindful of the latest laws and regulations pertaining to AI.

The False Debate

A means of trying to short-circuit a sensible debate on AI psychosis is to take the position that if you acknowledge such a thing as AI psychosis, you are somehow tossing out all the rest of mental health and mental health disorders. This is a crude and unreasonable attempt to make the discussion into an all-or-nothing affair.

It usually goes like this. You either believe in AI psychosis as a malady and supposedly reject all other mental health considerations, or you summarily reject the AI psychosis premise and embrace the known and well-established domain of mental health care.

Hogwash.

Savvy therapists can chew gum and walk at the same time. They retain all their usual practices and expertise when it comes to existing mental health considerations. On top of that, they get up to speed on AI. They have opted to add a newly emerging layer to their therapeutic services.

Those therapists who don’t want to assume that AI has anything to do with therapy can continue as they are. Best of luck to them. The problem they are going to increasingly face is that clients or patients are going to bring AI to their door and into their therapy sessions. More and more, people are coming into the therapist’s office with ChatGPT-generated mental health advice and wanting their therapist to comment on the AI-provided guidance (see my discussion on this at the link here).

AI is the 600-pound gorilla in the therapy room, whether it is welcomed or not.

Times Are Changing

Is AI a curse or a blessing when it comes to population-wide mental health?

The disconcerting answer is that nobody knows. In many ways, it seems possible that AI will boost mental health. Yet, in a dual-use conundrum, AI can also undermine mental health. We are in the midst of a worldwide wanton experiment. It is occurring on a breathtaking scale at a breathtaking pace.

A final thought for now.

Famed ethnobotanist Terence McKenna made this remark: “The most beautiful things in the universe are inside the human mind.” We need to make sure AI doesn’t mess up that beauty. Therapists have a big part to play in keeping human minds healthy and sound, especially during the ongoing Wild West era of contemporary AI adoption.